Unified Source-Filter GAN: Unified Source-Filter Network Based On Factorization of Quasi-Periodic Parallel WaveGAN

Reo Yoneyama, Yi-Chiao Wu, Tomoki Toda

Nagoya University

Accepted to InterSpeech 2021

Abstract

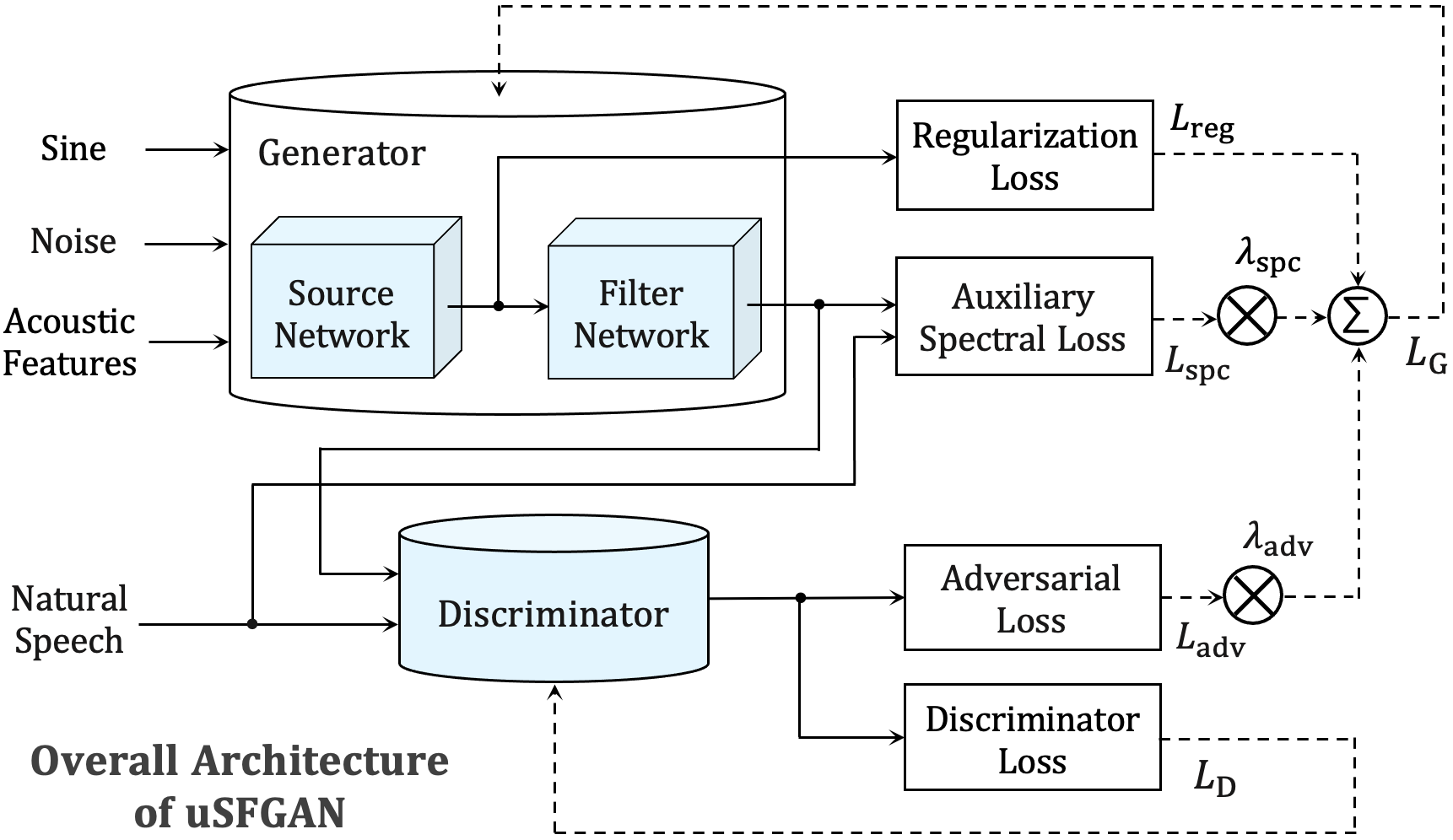

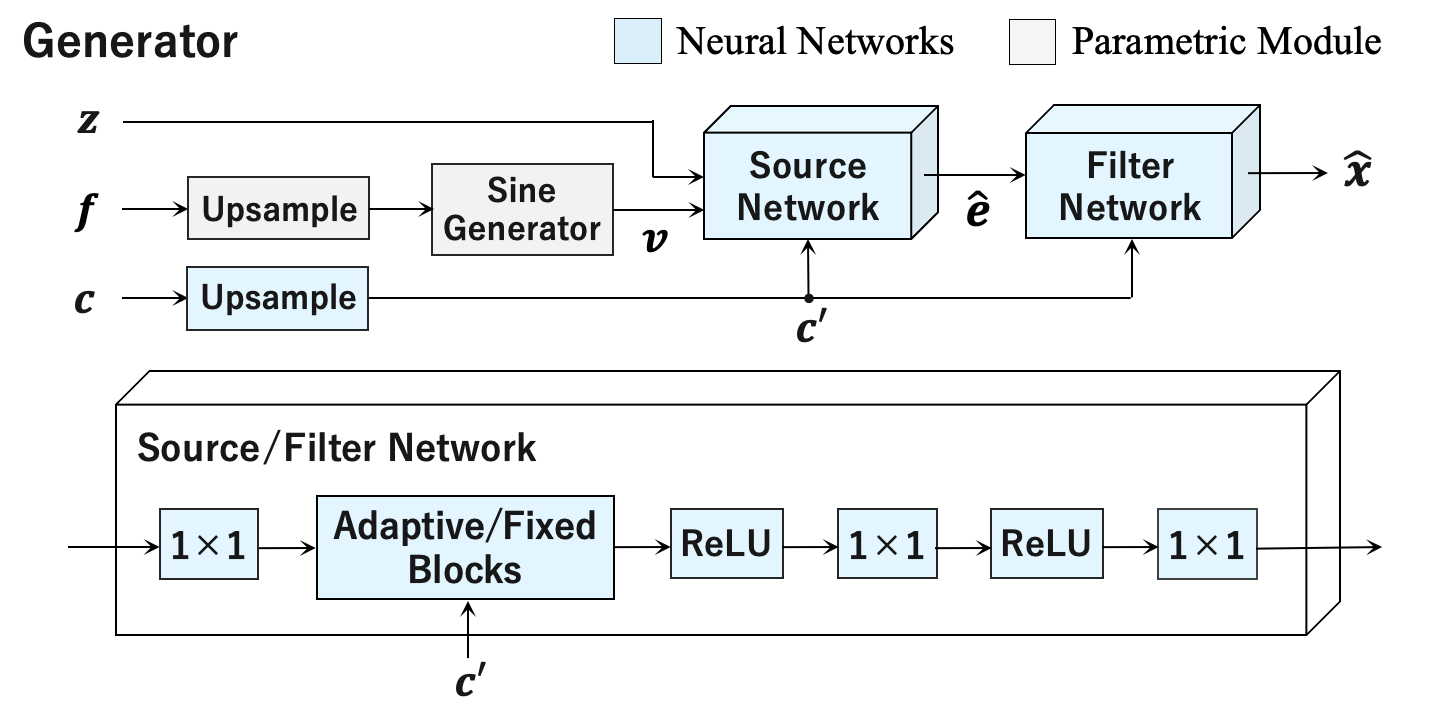

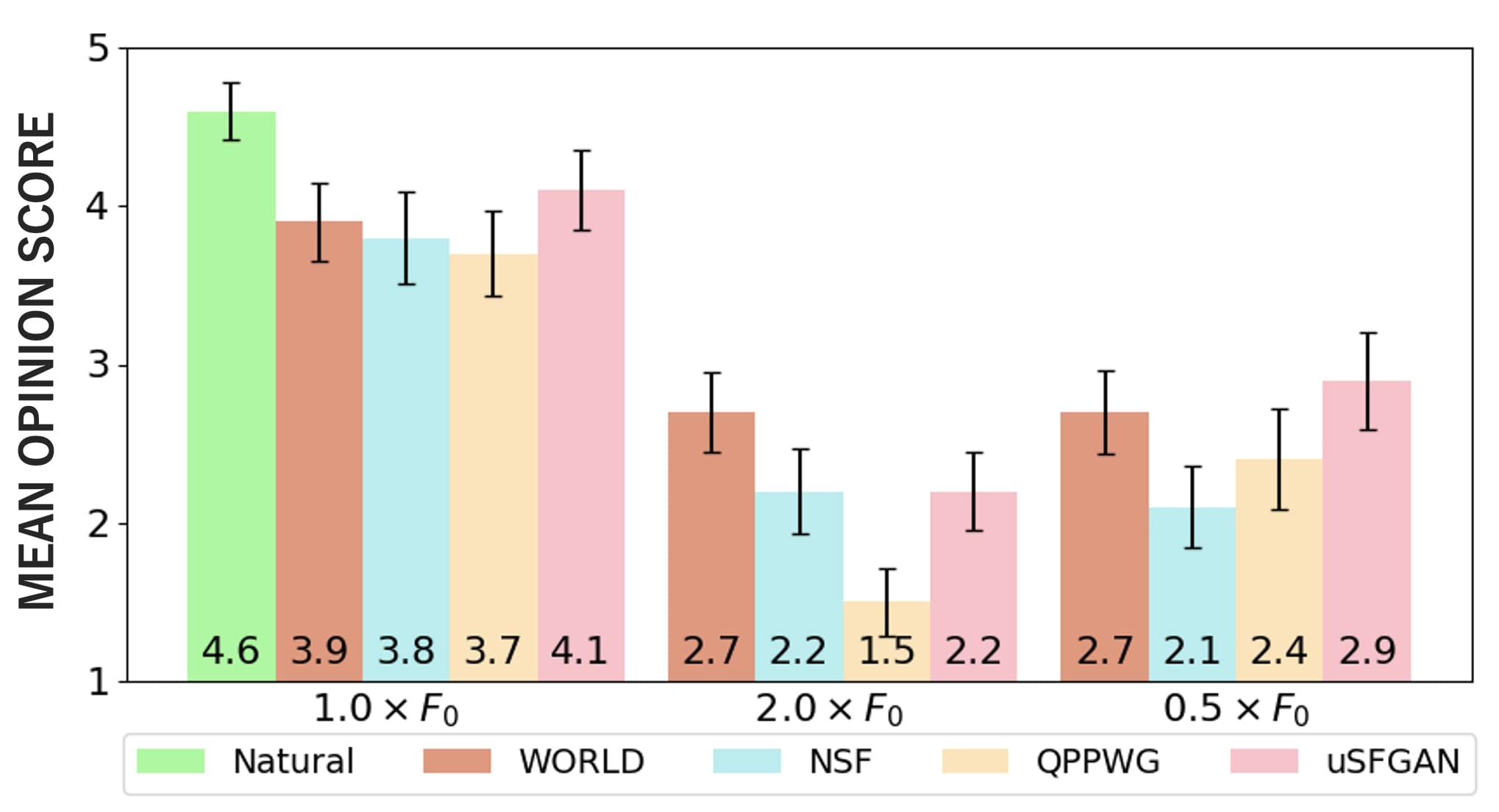

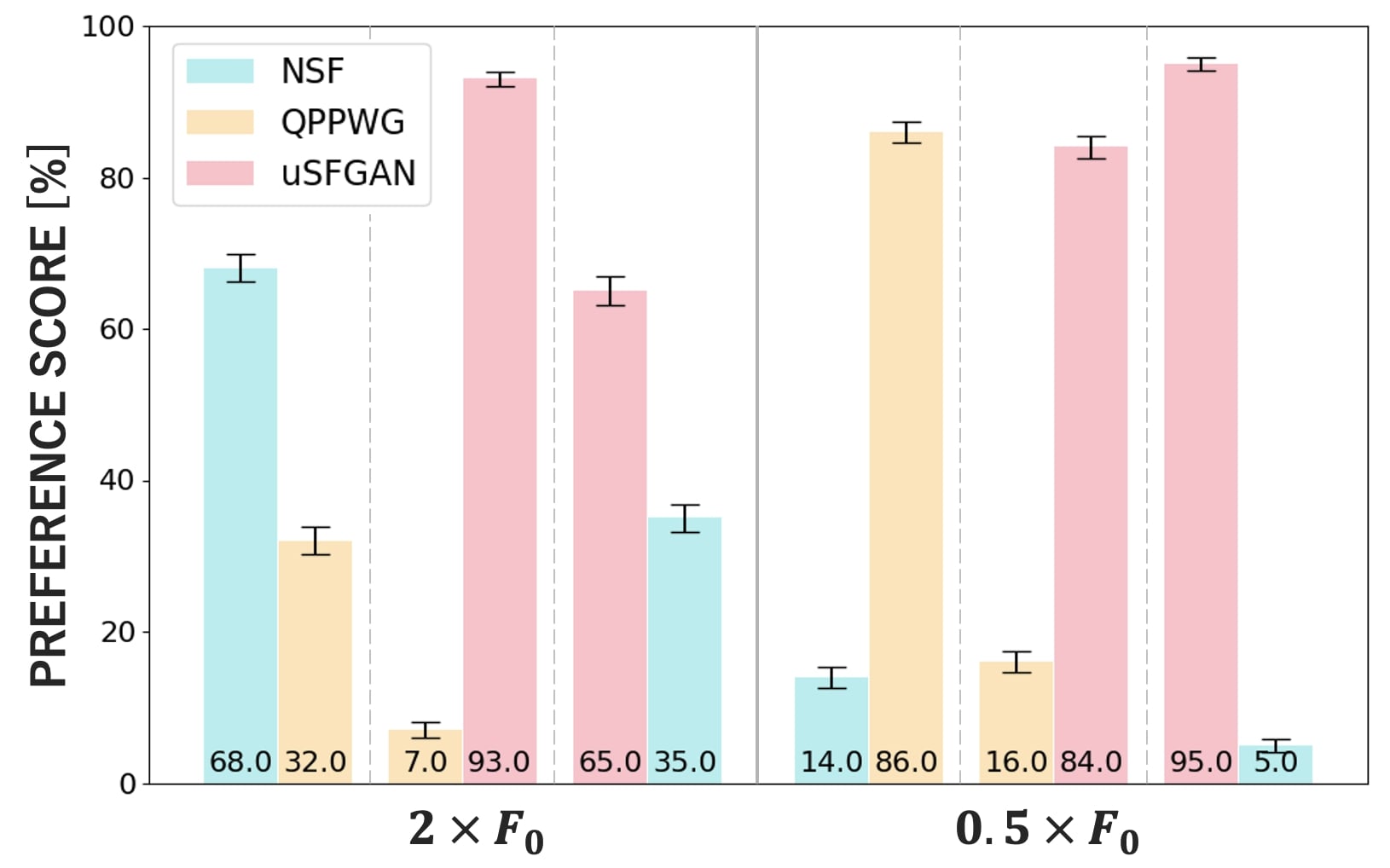

We propose a unified approach to data-driven source-filter modeling that uses a single neural network to build a neural vocoder capable of generating high-quality synthetic speech waveforms while retaining the flexibility of the source-filter model to control voice characteristics. The proposed network, called the unified source-filter generative adversarial network (uSFGAN), is developed by factorizing quasi-periodic parallel WaveGAN (QPPWG), a neural vocoder based on a single neural network, into a source excitation generation network and a vocal tract resonance filtering network by additionally introducing a regularization loss. Moreover, inspired by the neural source-filter (NSF) model, only a sinusoidal waveform is additionally used as the simplest cue for generating a periodic source excitation waveform, while minimizing the effect of approximations in the source-filter model. The experimental results demonstrate that uSFGAN outperforms conventional neural vocoders, such as QPPWG and NSF, in both speech quality and pitch controllability.
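To make the sinusoidal input mentioned in the abstract concrete, here is a minimal sketch of how a sine wave can be derived from a frame-level F0 contour by phase accumulation. It is not the authors' implementation: the function names `sine_from_f0` and `scale_f0`, the 16 kHz sample rate, the 80-sample hop size, the 0.1 amplitude, and the choice to output zeros for unvoiced frames are all assumptions made for illustration.

```python
import math


def sine_from_f0(f0_frames, sample_rate=16000, hop_size=80, amplitude=0.1):
    """Turn a frame-level F0 contour (Hz) into a sample-level sine wave.

    Each frame's F0 is held for `hop_size` samples (assumed value) and the
    instantaneous phase is accumulated sample by sample. Unvoiced frames
    (F0 == 0) yield zeros here, which is an illustrative choice only.
    """
    samples = []
    phase = 0.0
    for f0 in f0_frames:
        for _ in range(hop_size):
            if f0 > 0.0:
                # Advance the phase by 2*pi*f0/fs per sample, wrapped to [0, 2*pi).
                phase = (phase + 2.0 * math.pi * f0 / sample_rate) % (2.0 * math.pi)
                samples.append(amplitude * math.sin(phase))
            else:
                samples.append(0.0)
    return samples


def scale_f0(f0_frames, factor):
    """Scale voiced F0 values by `factor`; unvoiced frames (0) stay at 0."""
    return [f0 * factor for f0 in f0_frames]


# Hypothetical contour: voiced at 100 Hz, one unvoiced frame, voiced at 200 Hz.
f0 = [100.0, 0.0, 200.0]
sine = sine_from_f0(f0)
sine_high = sine_from_f0(scale_f0(f0, 2.0))  # same contour, one octave up
```

In a sketch like this, pitch control amounts to scaling the F0 contour before building the sine input, which is the kind of manipulation that pitch controllability refers to.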

[Paper] [Arxiv] [Code]

Demo

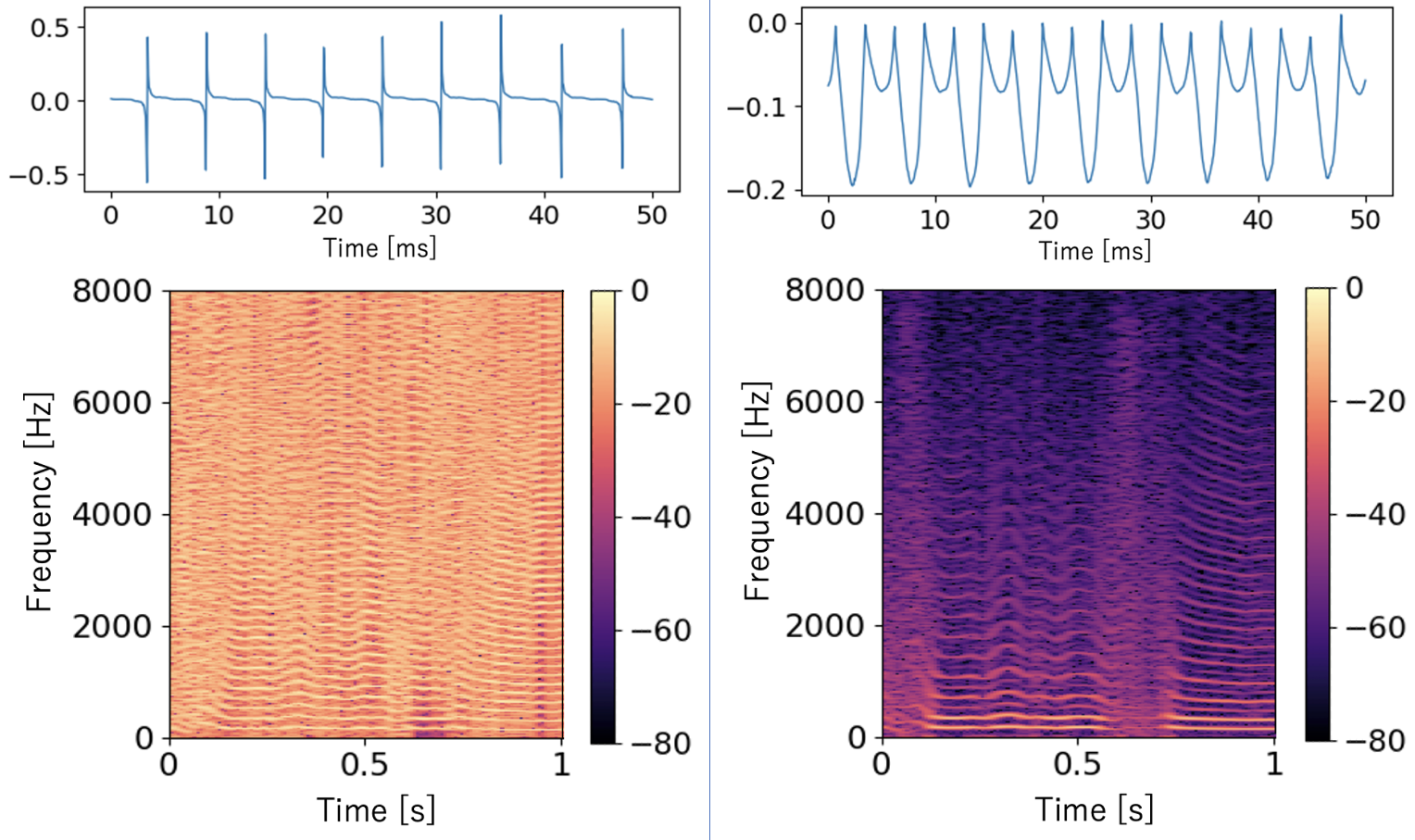

Comparison with baselines on the CMU-ARCTIC [1] corpus. The baseline models are WORLD [2], NSF [3], and QP-PWG [4].

Please also see the demo of our new work, harmonic-plus-noise uSFGAN, here.

| Model | Speech | Excitation |
|---|---|---|
| Natural | | |
| WORLD | | |
| NSF | | |
| QP-PWG | | |
| uSFGAN | | |
| uSFGAN w/o regularization loss | | |

Citation

@inproceedings{yoneyama21_interspeech,
  author={Reo Yoneyama and Yi-Chiao Wu and Tomoki Toda},
  title={{Unified Source-Filter GAN: Unified Source-Filter Network Based On Factorization of Quasi-Periodic Parallel WaveGAN}},
  year=2021,
  booktitle={Proc. Interspeech 2021},
  pages={2187--2191},
  doi={10.21437/Interspeech.2021-517}
}

References

[1] J. Kominek and A. W. Black, “The CMU ARCTIC speech databases for speech synthesis research,” Tech. Rep. CMU-LTI-03-177, 2003.

[2] M. Morise, F. Yokomori, and K. Ozawa, “WORLD: a vocoder-based high-quality speech synthesis system for real-time applications,” IEICE Transactions on Information and Systems, vol. 99, no. 7, pp. 1877–1884, 2016.

[3] X. Wang, S. Takaki, and J. Yamagishi, “Neural source-filter waveform models for statistical parametric speech synthesis,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 28, pp. 402–415, 2020.

[4] Y.-C. Wu, T. Hayashi, T. Okamoto, H. Kawai, and T. Toda, “Quasi-periodic parallel WaveGAN: A non-autoregressive raw waveform generative model with pitch-dependent dilated convolution neural network,” IEEE/ACM Transactions on Audio, Speech, and Language Processing, vol. 29, pp. 792–806, 2021.