Unified Source-Filter GAN with Harmonic-plus-Noise Source Excitation Generation

Reo Yoneyama, Yi-Chiao Wu, Tomoki Toda

Nagoya University

Accepted to Interspeech 2022

Abstract

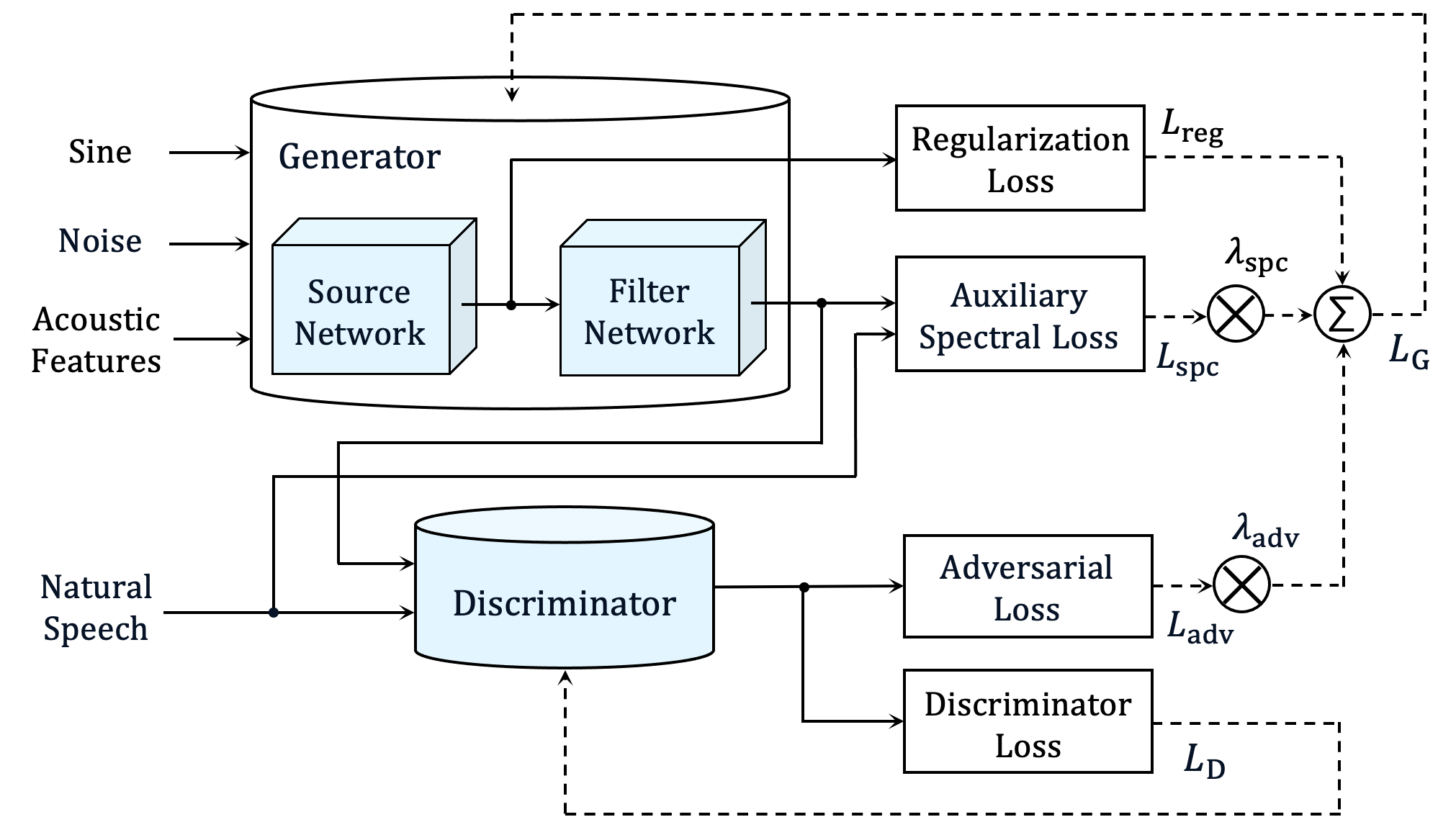

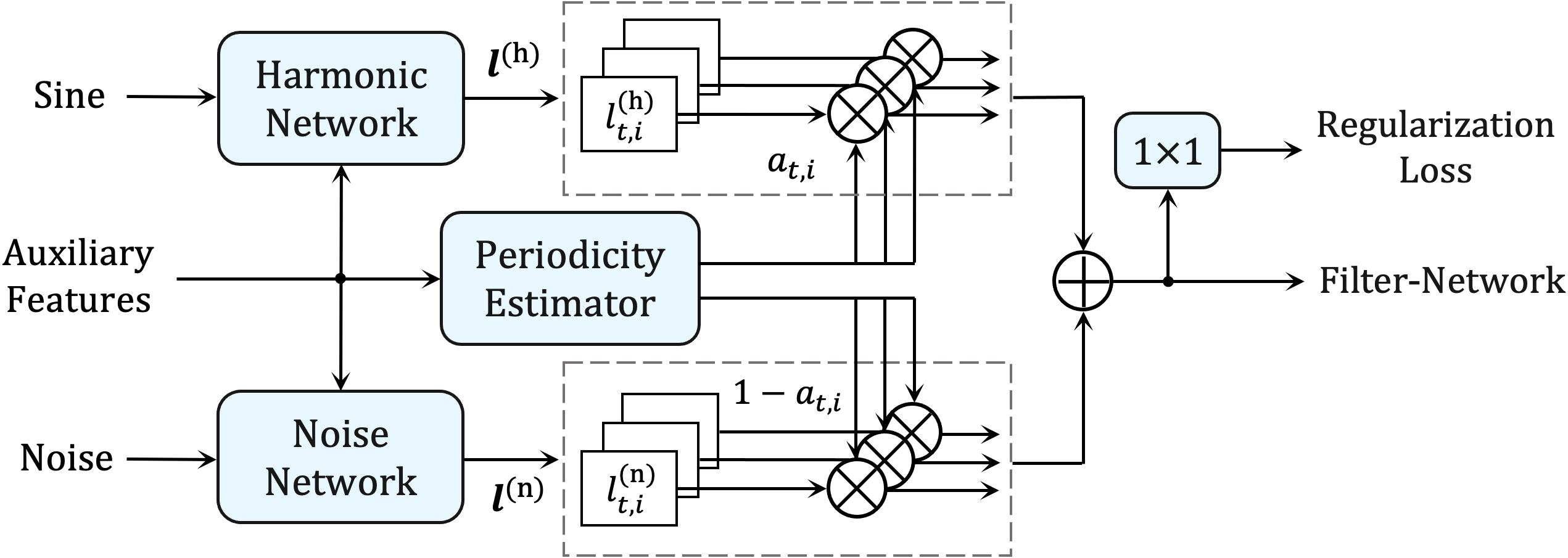

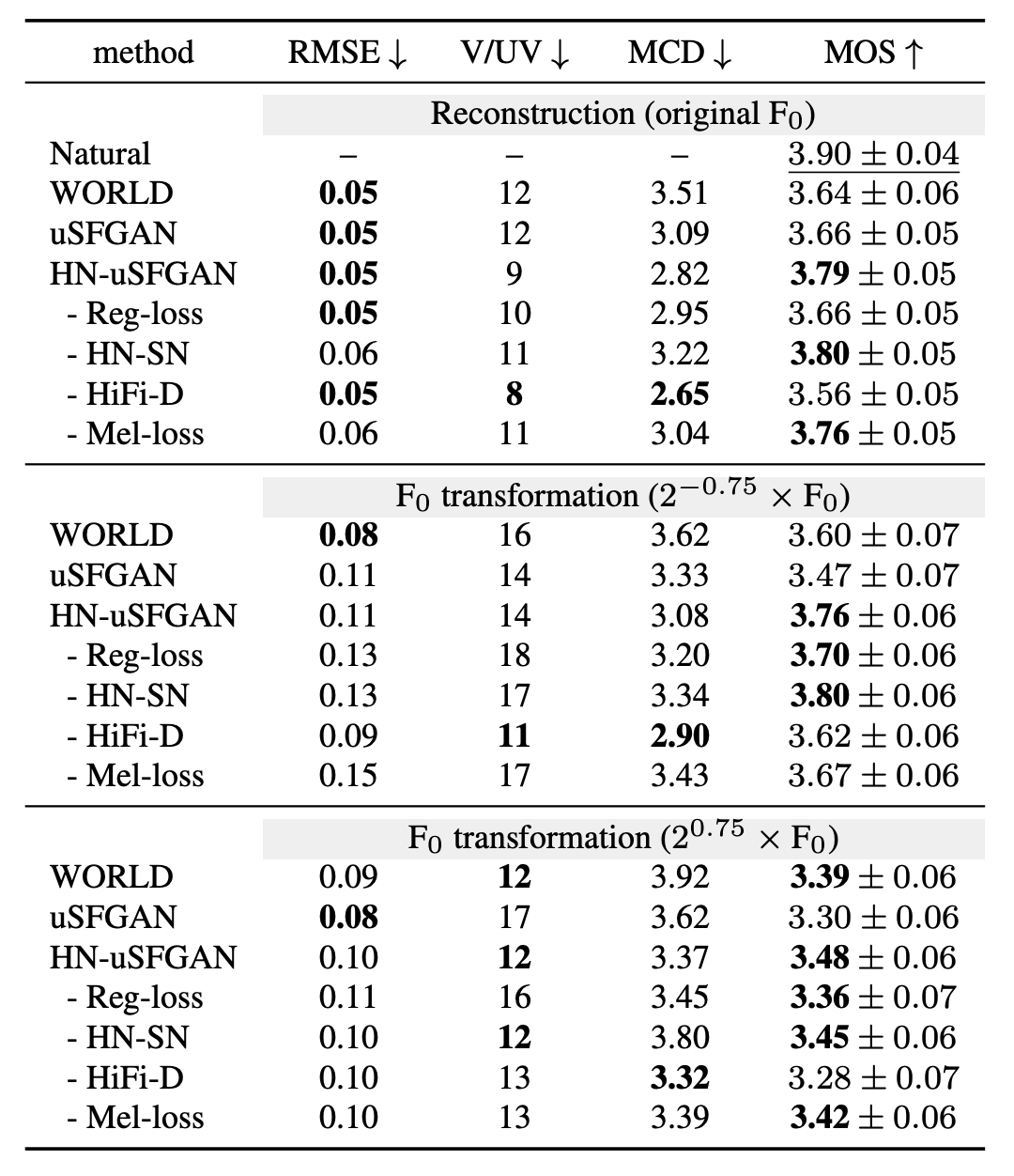

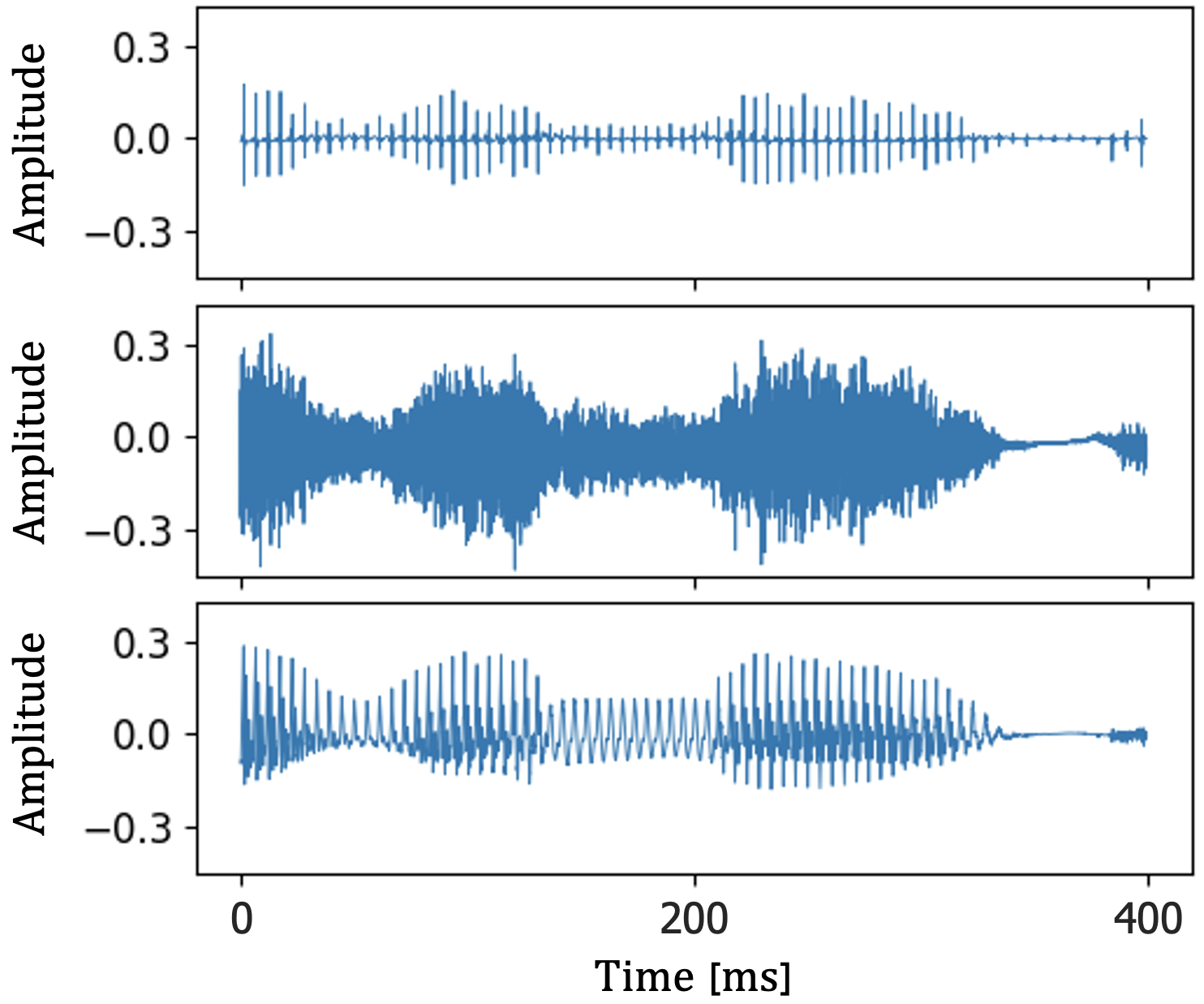

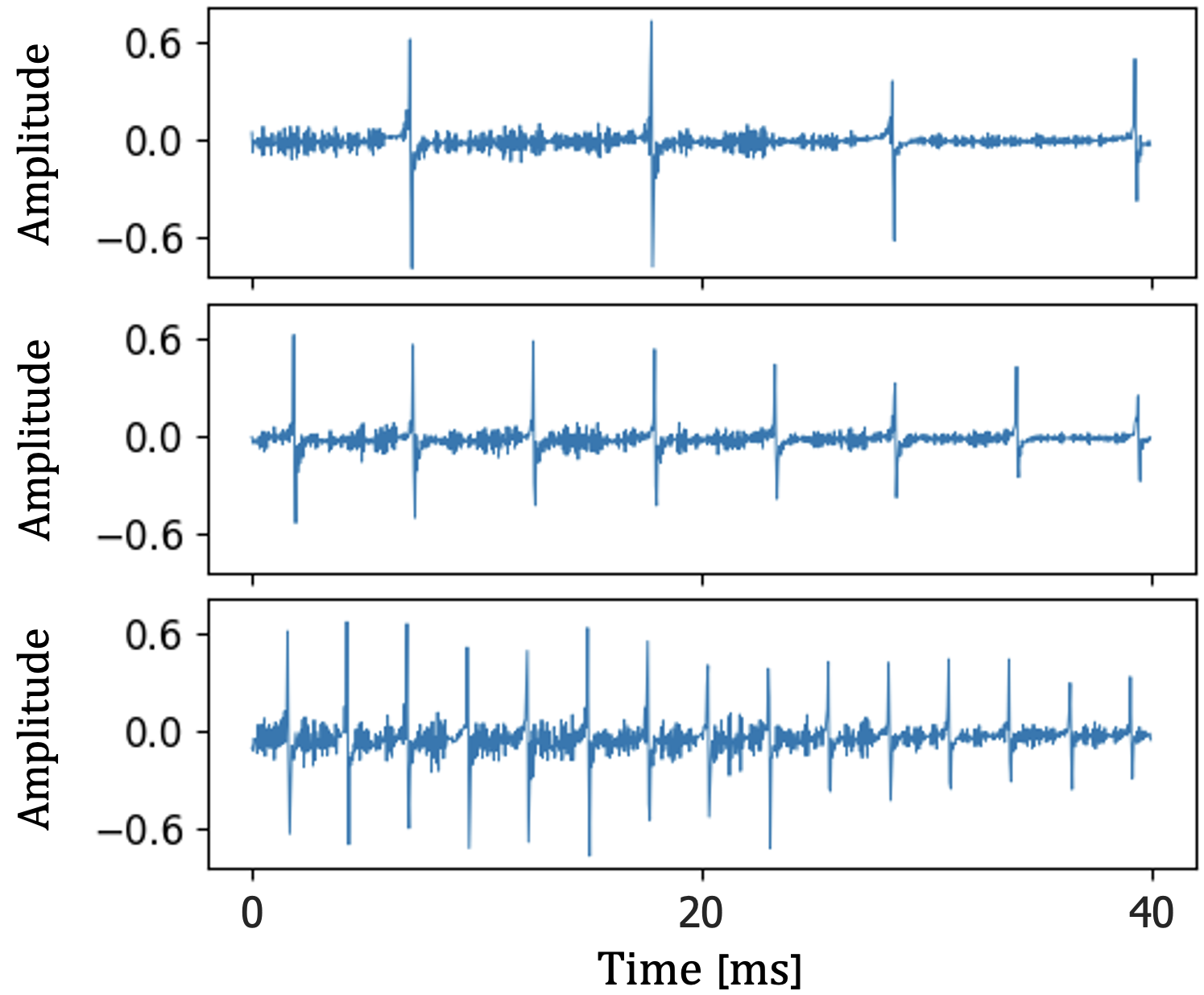

This paper introduces a unified source-filter network with a harmonic-plus-noise source excitation generation mechanism. In our previous work, we proposed unified Source-Filter GAN (uSFGAN) for developing a high-fidelity neural vocoder with flexible voice controllability using a unified source-filter neural network architecture. However, the capability of uSFGAN to model the aperiodic source excitation signal is insufficient, and there is still a gap in sound quality between the natural and generated speech. To improve the source excitation modeling and generated sound quality, a new source excitation generation network separately generating periodic and aperiodic components is proposed. The advanced adversarial training procedure of HiFiGAN is also adopted to replace that of Parallel WaveGAN used in the original uSFGAN. Both objective and subjective evaluation results show that the modified uSFGAN significantly improves the sound quality of the basic uSFGAN while maintaining the voice controllability.
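As a rough illustration of what "harmonic-plus-noise" means for a source excitation signal, the sketch below builds a toy excitation as the sum of a periodic component (a sine that follows the F0 contour) and an aperiodic component (Gaussian noise). This is a classical signal-processing analogy only, not the neural source excitation generation network proposed in the paper. The function name, the sampling rate, the hop size, and the noise level are all hypothetical values chosen for the example.

```python
import numpy as np


def harmonic_plus_noise_excitation(f0, sample_rate=24000, hop_size=120,
                                   noise_std=0.03, seed=0):
    """Toy excitation: F0-driven sine (periodic) plus Gaussian noise (aperiodic).

    f0 holds one F0 value in Hz per frame; 0 marks an unvoiced frame.
    All default values are illustrative assumptions, not settings from the paper.
    """
    rng = np.random.default_rng(seed)
    # Upsample the frame-level F0 contour to the sample level by repetition.
    f0_samples = np.repeat(np.asarray(f0, dtype=np.float64), hop_size)
    voiced = f0_samples > 0
    # Integrate the instantaneous frequency to obtain the sine phase.
    phase = 2.0 * np.pi * np.cumsum(f0_samples / sample_rate)
    # Periodic component: present only in voiced regions.
    periodic = np.where(voiced, np.sin(phase), 0.0)
    # Aperiodic component: noise is present in both voiced and unvoiced regions.
    aperiodic = noise_std * rng.standard_normal(f0_samples.shape)
    return periodic, aperiodic, periodic + aperiodic


# Four frames: unvoiced, two voiced frames at 200 Hz, unvoiced.
periodic, aperiodic, excitation = harmonic_plus_noise_excitation(
    np.array([0.0, 200.0, 200.0, 0.0])
)
```

Keeping the two components as separate outputs mirrors the idea stated in the abstract of generating periodic and aperiodic parts separately before combining them.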

[Paper] [Arxiv] [Code]

Demo

Comparison on the VCTK corpus [1]. The speech samples were randomly selected. The baseline models are WORLD [2] and uSFGAN [3]. "- Reg-loss" denotes HN-uSFGAN trained with the regularization loss of uSFGAN instead of the proposed one. "- HN-SN" denotes HN-uSFGAN with the source excitation generation network of uSFGAN instead of the proposed harmonic-plus-noise one. "- HiFi-D" denotes HN-uSFGAN trained with the Parallel WaveGAN [5] discriminator instead of the HiFiGAN [4] discriminator. "- Mel-loss" denotes HN-uSFGAN trained with the multi-resolution STFT loss [5] instead of the mel-spectral L1 loss. Please also see the demo of our previous work uSFGAN here.

| Model | Reconstruct | F0 × 2^-1.00 | F0 × 2^-0.75 | F0 × 2^-0.50 | F0 × 2^-0.25 | F0 × 2^0.25 | F0 × 2^0.50 | F0 × 2^0.75 | F0 × 2^1.00 |
|---|---|---|---|---|---|---|---|---|---|
| Natural | | | | | | | | | |
| WORLD | | | | | | | | | |
| uSFGAN | | | | | | | | | |
| HN-uSFGAN (Proposed) | | | | | | | | | |
| HN-uSFGAN - Reg-loss | | | | | | | | | |
| HN-uSFGAN - HN-SN | | | | | | | | | |
| HN-uSFGAN - HiFi-D | | | | | | | | | |
| HN-uSFGAN - Mel-loss | | | | | | | | | |
Citation

@inproceedings{yoneyama22_interspeech,
  author={Reo Yoneyama and Yi-Chiao Wu and Tomoki Toda},
  title={{Unified Source-Filter GAN with Harmonic-plus-Noise Source Excitation Generation}},
  year=2022,
  booktitle={Proc. Interspeech 2022},
  pages={848--852},
  doi={10.21437/Interspeech.2022-11130}
}

References

[1] J. Yamagishi, C. Veaux, and K. MacDonald, “CSTR VCTK Corpus: English multi-speaker corpus for CSTR voice cloning toolkit,” 2019. [Online]. Available: https://doi.org/10.7488/ds/2645

[2] M. Morise, F. Yokomori, and K. Ozawa, “WORLD: a vocoder-based high-quality speech synthesis system for real-time applications,” IEICE Transactions on Information and Systems, vol. 99, no. 7, pp. 1877–1884, 2016.

[3] R. Yoneyama, Y.-C. Wu, and T. Toda, “Unified Source-Filter GAN: Unified Source-Filter Network Based On Factorization of Quasi-Periodic Parallel WaveGAN,” in Proc. Interspeech, 2021, pp. 2187–2191.

[4] J. Kong, J. Kim, and J. Bae, “HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis,” in Proc. NeurIPS, 2020, vol. 33, pp. 17022–17033.

[5] R. Yamamoto, E. Song, and J.-M. Kim, “Parallel WaveGAN: A fast waveform generation model based on generative adversarial networks with multi-resolution spectrogram,” in Proc. ICASSP, 2020, pp. 6199–6203.