Source-Filter HiFi-GAN: Fast and Pitch Controllable High-Fidelity Neural Vocoder

Reo Yoneyama¹, Yi-Chiao Wu², and Tomoki Toda¹

¹Nagoya University, Japan; ²Meta Reality Labs Research, USA

Accepted to ICASSP 2023

Abstract

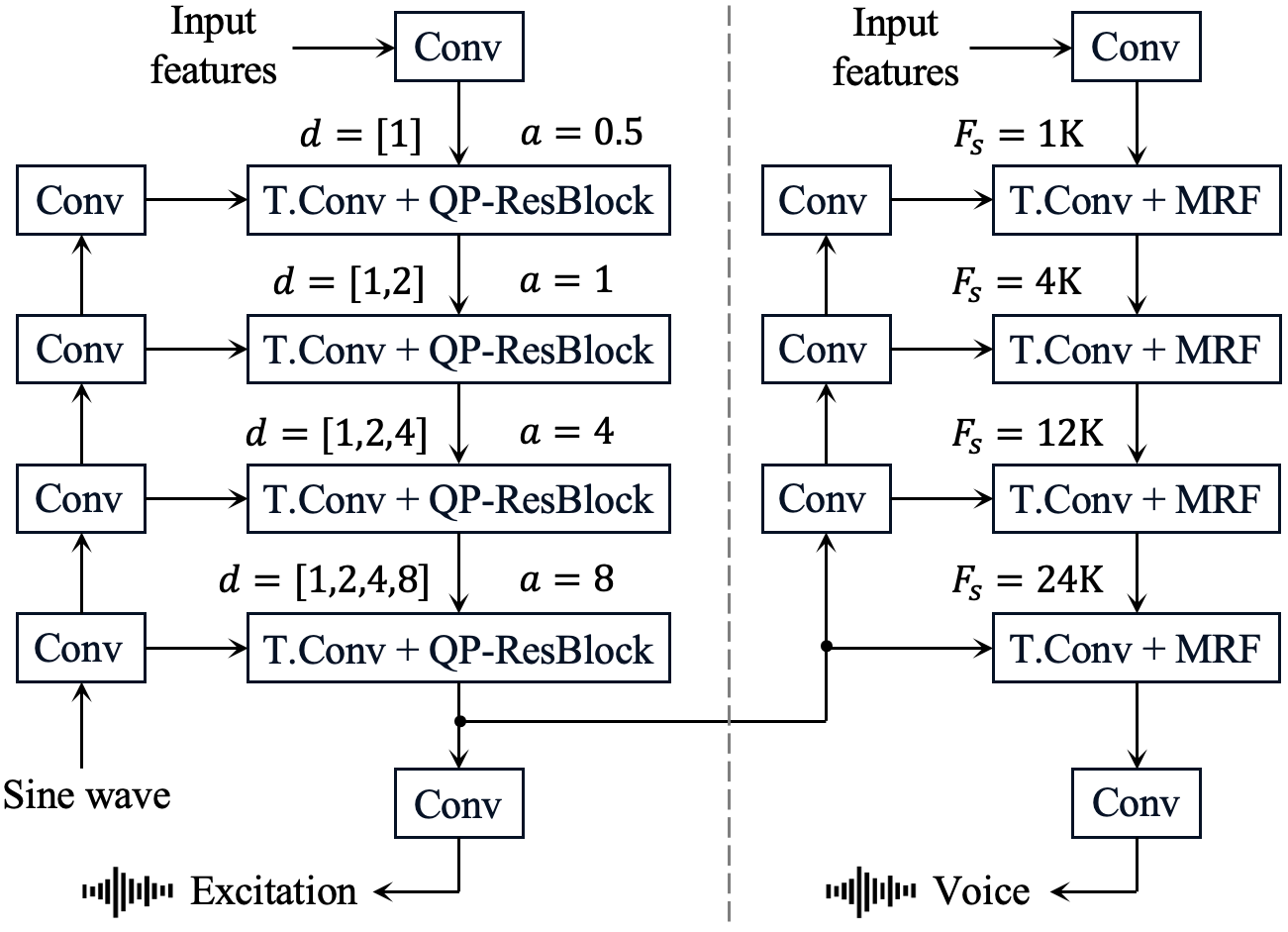

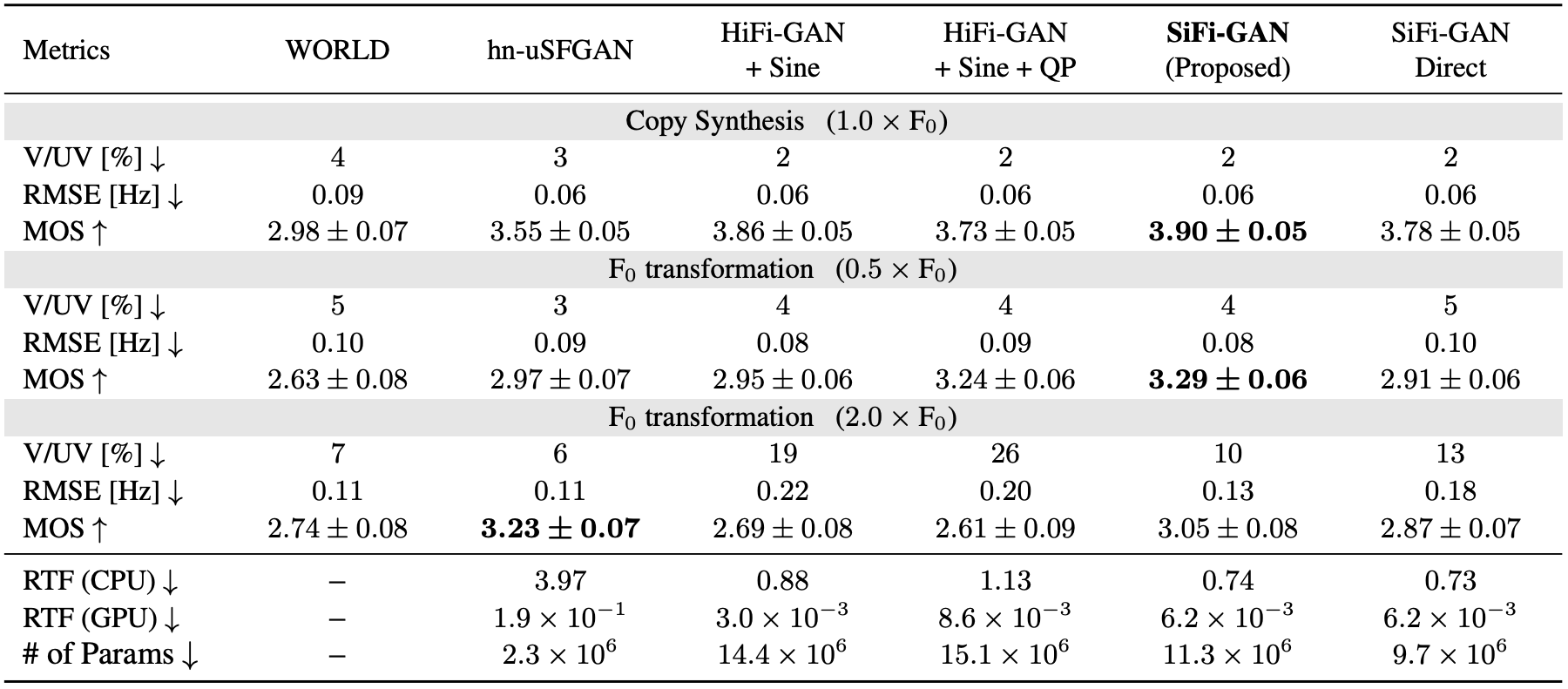

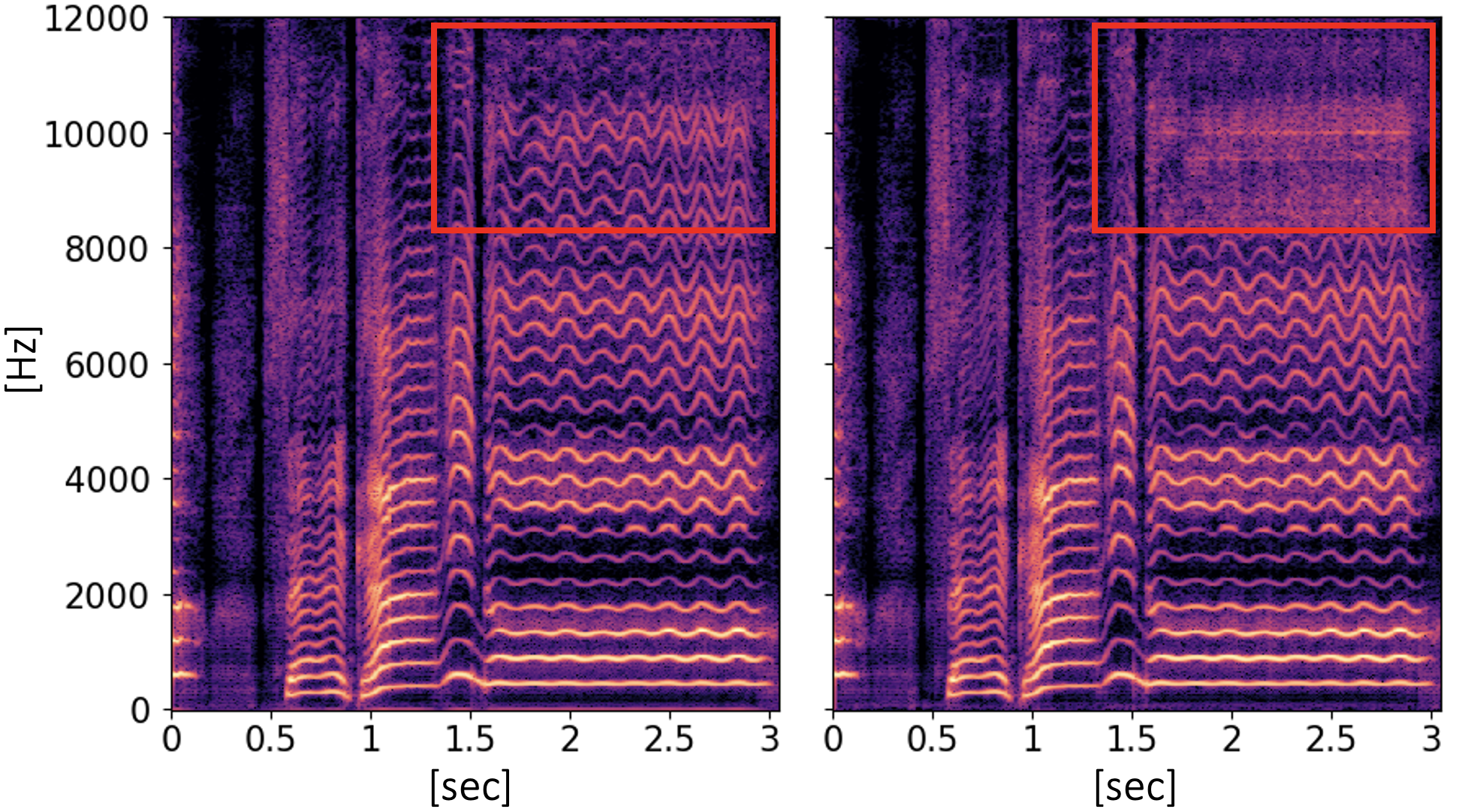

Our previous work, the unified source-filter GAN (uSFGAN) vocoder, introduced a novel architecture based on the source-filter theory into the parallel waveform generative adversarial network to achieve high voice quality and pitch controllability. However, its high-temporal-resolution inputs result in high computation costs. Although the HiFi-GAN vocoder achieves fast high-fidelity voice generation thanks to its efficient upsampling-based generator architecture, its pitch controllability is severely limited. To realize a fast and pitch-controllable high-fidelity neural vocoder, we introduce the source-filter theory into HiFi-GAN by hierarchically conditioning the resonance filtering network on well-estimated source excitation information. According to the experimental results, our proposed method outperforms HiFi-GAN and uSFGAN in singing voice generation, both in voice quality and in synthesis speed on a single CPU. Furthermore, unlike the uSFGAN vocoder, the proposed method can be easily adopted in real-time applications and integrated into end-to-end systems.

[Paper] [Arxiv] [Code]

Demo

Only the Namine Ritsu [3] database is used for training. All vocoders are conditioned on WORLD [4] features.

All provided samples come from songs that were not seen during training.

Model details

- WORLD : A conventional source-filter vocoder [4].

- hn-uSFGAN : Harmonic-plus-noise unified source-filter GAN [5]. Please check the DEMO for more information.

- HiFi-GAN : The vanilla HiFi-GAN (V1) [1] conditioned on the WORLD features.

- HiFi-GAN + Sine : HiFi-GAN (V1) conditioned on the WORLD features and the sine embedding through downsampling CNNs [6-8].

- HiFi-GAN + Sine + QP : The HiFi-GAN + Sine model extended by inserting QP-ResBlocks after each transposed CNN.

- SiFi-GAN : Proposed source-filter HiFi-GAN.

- SiFi-GAN Direct : SiFi-GAN without the second downsampling CNNs. In this model, the source excitation representations from each QP-ResBlock are fed directly to the filter network at the corresponding temporal resolution, without passing through downsampling CNNs.
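The "sine embedding" mentioned in the model list is derived from the F0 contour. As a rough illustration only, the sketch below generates a sample-level sine wave from a frame-level F0 sequence by accumulating phase. The function name `sine_from_f0`, the parameters `hop_size` and `sample_rate`, and the choice to output silence for unvoiced frames (F0 = 0) are assumptions made for this example; the sine embedding actually used by the models above may be computed differently.

```python
import math


def sine_from_f0(f0_frames, hop_size, sample_rate):
    """Generate a sample-level sine wave from a frame-level F0 contour in Hz.

    Assumption for this sketch: unvoiced frames are marked by F0 == 0
    and produce zeros; each frame value is held for hop_size samples.
    """
    signal = []
    phase = 0.0
    two_pi = 2.0 * math.pi
    for f0 in f0_frames:
        for _ in range(hop_size):
            if f0 > 0.0:
                # Advance the phase by one sample of the instantaneous frequency.
                phase = (phase + two_pi * f0 / sample_rate) % two_pi
                signal.append(math.sin(phase))
            else:
                signal.append(0.0)
    return signal


# Hypothetical toy input: one voiced frame at 100 Hz, one unvoiced frame.
sig = sine_from_f0([100.0, 0.0], hop_size=4, sample_rate=400)
print(len(sig))
```

Holding each frame value for `hop_size` samples is the simplest possible upsampling; interpolating F0 between frames would give a smoother signal.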

| Model | Copy Synthesis | $F_0 \times 2^{-1.0}$ | $F_0 \times 2^{-0.5}$ | $F_0 \times 2^{0.5}$ | $F_0 \times 2^{1.0}$ |
|---|---|---|---|---|---|
| Natural | | | | | |
| WORLD | | | | | |
| hn-uSFGAN | | | | | |
| HiFi-GAN | | | | | |
| HiFi-GAN + Sine | | | | | |
| HiFi-GAN + Sine + QP | | | | | |
| SiFi-GAN (Proposed) | | | | | |
| SiFi-GAN Direct | | | | | |
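The column headers scale F0 by powers of two: an exponent of ±1.0 shifts the pitch by one octave (12 semitones) and ±0.5 by half an octave (6 semitones). A minimal sketch of this arithmetic follows. It assumes F0 is given in Hz per frame with 0 marking unvoiced frames, as in WORLD's F0 output; the helper names and the toy contour are hypothetical and are not taken from the released code.

```python
# Exponents k corresponding to the table columns F0 x 2^k.
EXPONENTS = [-1.0, -0.5, 0.5, 1.0]


def scale_f0(f0_frames, exponent):
    """Scale voiced F0 values (Hz) by 2**exponent; unvoiced frames (0) stay 0."""
    factor = 2.0 ** exponent
    return [f0 * factor if f0 > 0.0 else 0.0 for f0 in f0_frames]


def exponent_to_semitones(exponent):
    """Convert a power-of-two exponent to a shift in equal-tempered semitones."""
    return 12.0 * exponent


# Hypothetical toy contour: one unvoiced frame followed by two voiced frames.
f0 = [0.0, 220.0, 440.0]
for k in EXPONENTS:
    print(k, exponent_to_semitones(k), scale_f0(f0, k))
```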

Citation

@INPROCEEDINGS{10095298,
  author={Yoneyama, Reo and Wu, Yi-Chiao and Toda, Tomoki},
  title={{Source-Filter HiFi-GAN: Fast and Pitch Controllable High-Fidelity Neural Vocoder}},
  booktitle={IEEE International Conference on Acoustics, Speech and Signal Processing},
  year={2023},
  volume={},
  number={},
  pages={1-5},
  doi={10.1109/ICASSP49357.2023.10095298}
}

Acknowledgements

References

[1] J. Kong, J. Kim, and J. Bae, “HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis,” in Advances in NeurIPS, 2020, vol. 33, pp. 17022–17033.

[2] Y.-C. Wu, T. Hayashi, T. Okamoto, H. Kawai, and T. Toda, “Quasi-Periodic Parallel WaveGAN: A Non-Autoregressive Raw Waveform Generative Model With Pitch-Dependent Dilated Convolution Neural Network,” IEEE/ACM TASLP, vol. 29, pp. 792–806, 2021.

[3] Canon, “[NamineRitsu] Blue (YOASOBI) [ENUNU model Ver.2, Singing DBVer.2 release],” https://www.youtube.com/watch?v=pKeo9IE_L1I.

[4] M. Morise, F. Yokomori, and K. Ozawa, “WORLD: a vocoder-based high-quality speech synthesis system for real-time applications,” IEICE Transactions on Information and Systems, vol. 99, no. 7, pp. 1877–1884, 2016.

[5] R. Yoneyama, Y.-C. Wu, and T. Toda, “Unified Source-Filter GAN with Harmonic-plus-Noise Source Excitation Generation,” in Proc. Interspeech, 2022, pp. 848–852.

[6] K. Matsubara, T. Okamoto, R. Takashima, T. Takiguchi, T. Toda, and H. Kawai, “Period-HiFi-GAN: Fast and fundamental frequency controllable neural vocoder,” in Proc. Acoustical Society of Japan, in Japanese, Mar. 2022, pp. 901–904.

[7] S. Shimizu, T. Okamoto, R. Takashima, T. Takiguchi, T. Toda, and H. Kawai, “Initial investigation of fundamental frequency controllable HiFi-GAN conditioned on mel-spectrogram,” in Proc. Acoustical Society of Japan, Sep. 2022, pp. 1137–1140.

[8] K. Matsubara, T. Okamoto, R. Takashima, T. Takiguchi, T. Toda, and H. Kawai, “Harmonic-Net+: Fundamental frequency controllable fast neural vocoder with harmonic wave input and Layerwise-Quasi-Periodic CNNs,” in Proc. Acoustical Society of Japan, Sep. 2022, pp. 1133–1136.