High-Fidelity and Pitch-Controllable Neural Vocoder Based on Unified Source-Filter Networks

Reo Yoneyama¹, Yi-Chiao Wu², and Tomoki Toda¹

¹Nagoya University, Japan; ²Meta Reality Labs Research, USA

Accepted to IEEE/ACM Trans. ASLP

Abstract

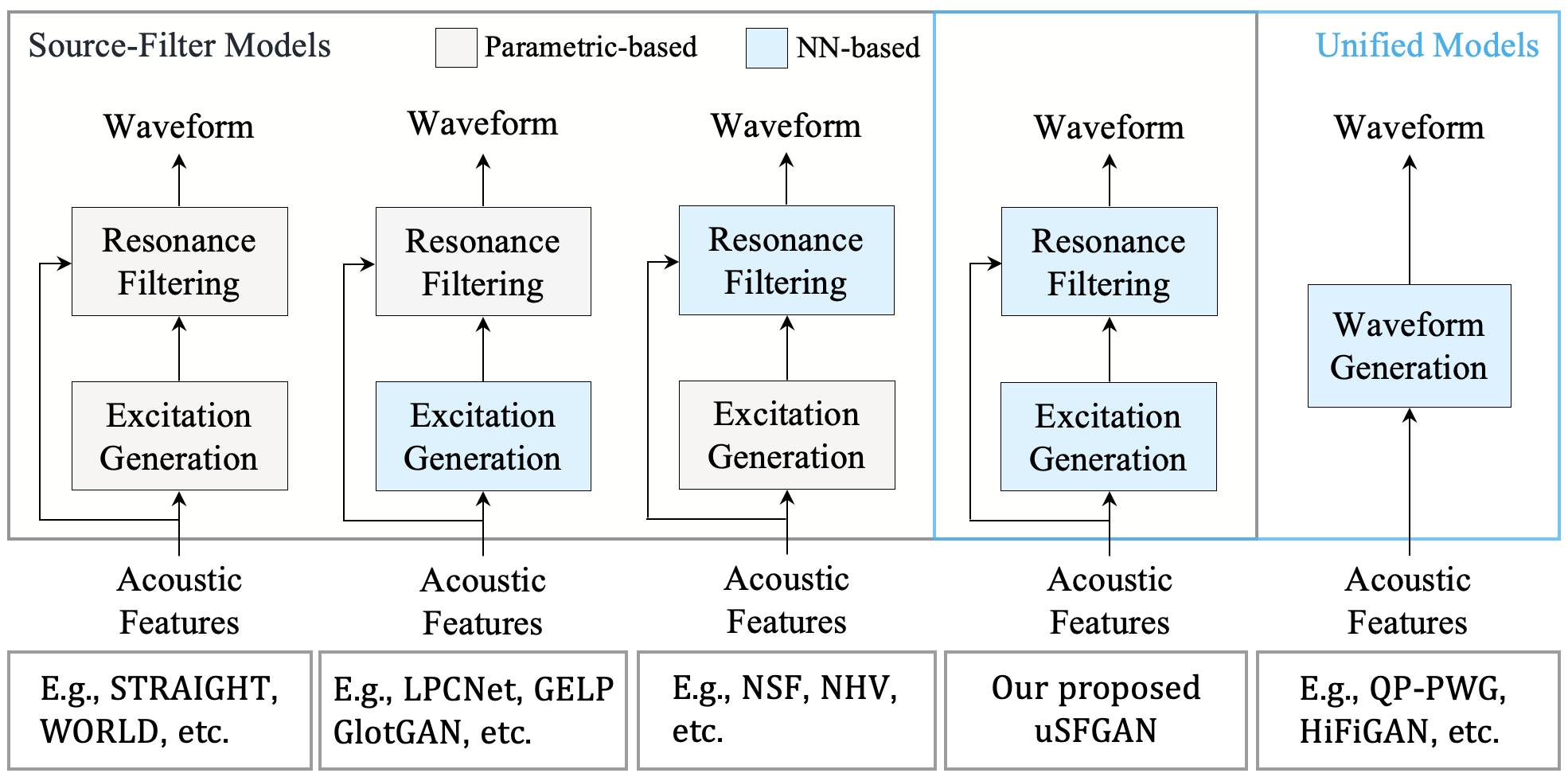

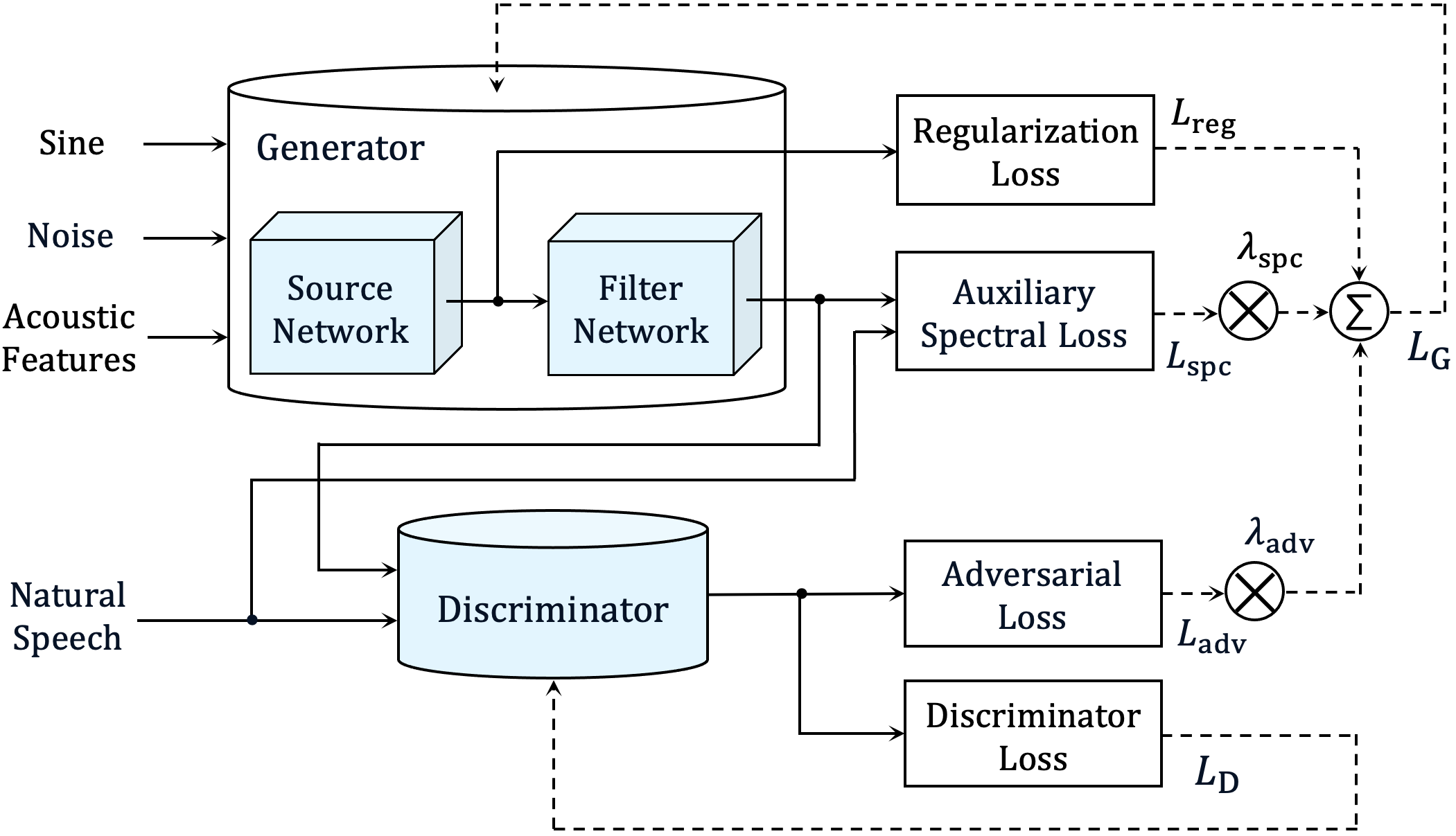

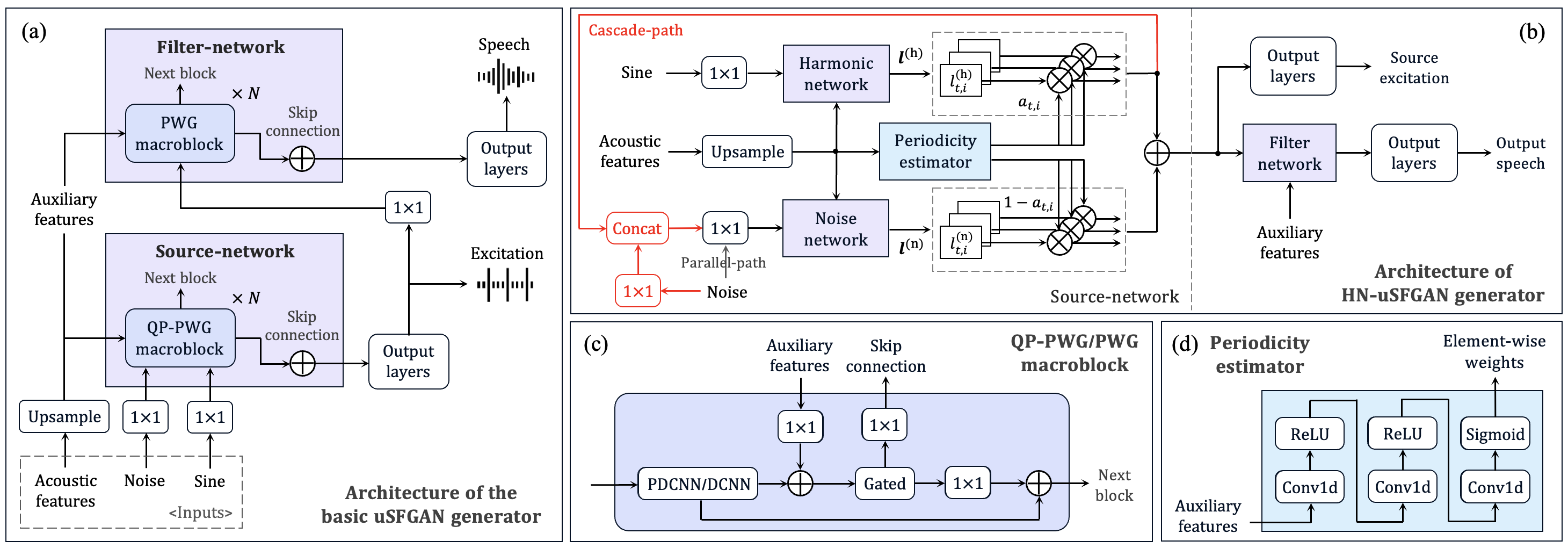

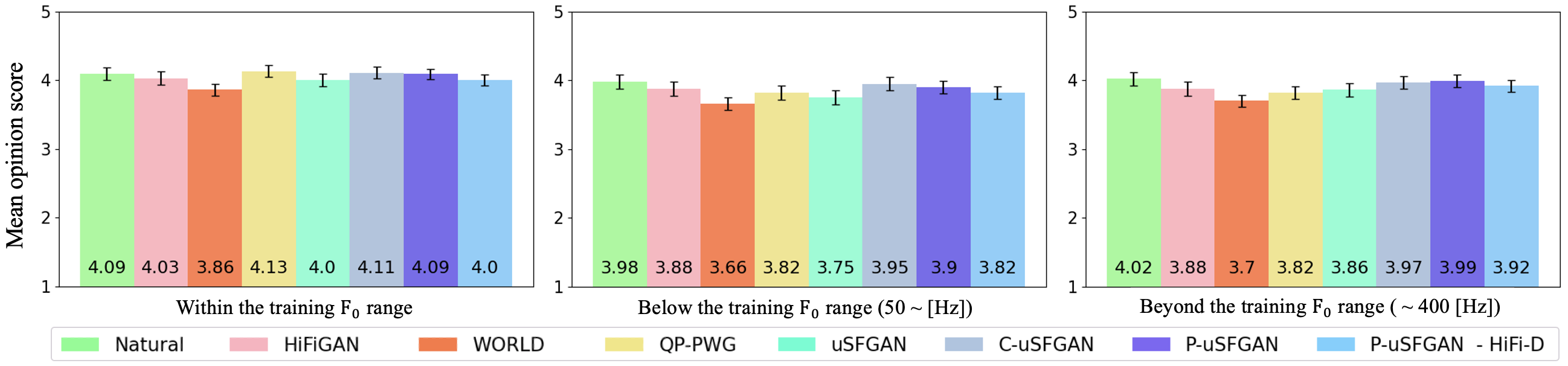

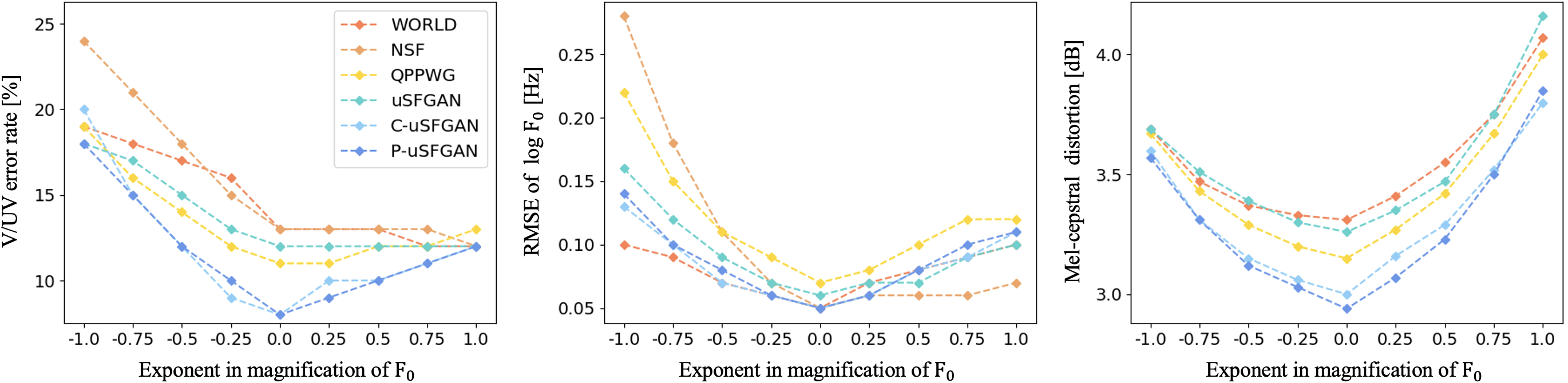

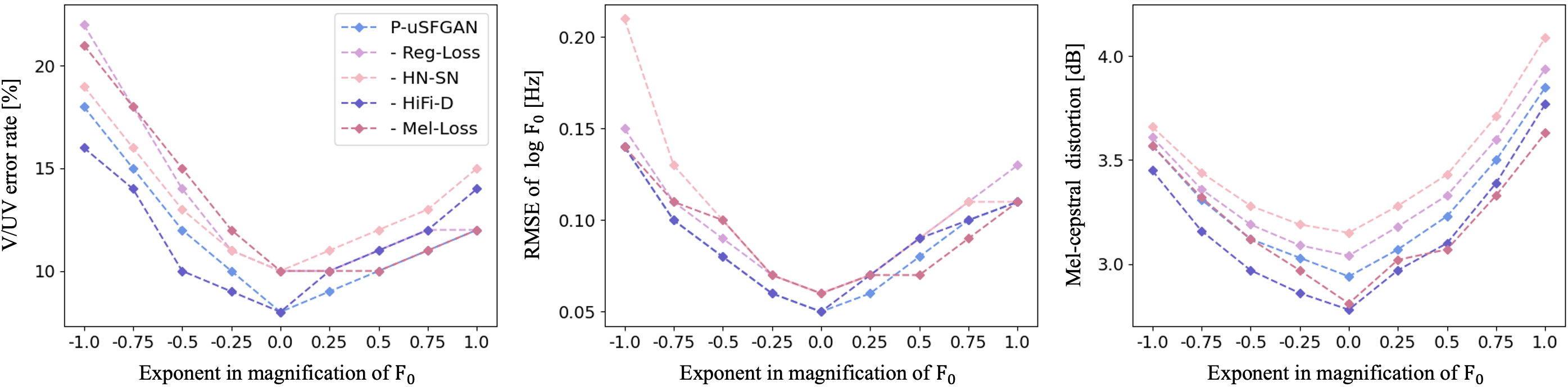

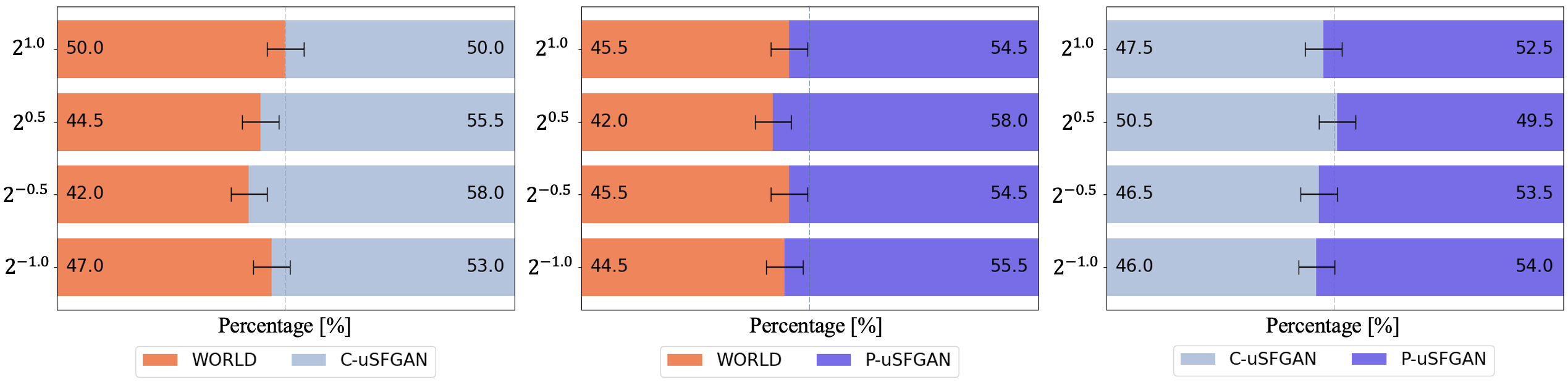

We introduce unified source-filter generative adversarial networks (uSFGAN), a waveform generative model conditioned on acoustic features, which represents the source-filter architecture in a single generator network. Unlike previous neural source-filter models, in which parametric signal processing modules are combined with neural networks, our approach enables unified optimization of both the source excitation generation and resonance filtering parts to achieve higher sound quality. In the uSFGAN framework, several specific regularization losses are proposed so that the source excitation generation part outputs reasonable source excitation signals. Both objective and subjective experiments are conducted, and the results demonstrate that the proposed uSFGAN achieves sound quality comparable to HiFi-GAN in the speech reconstruction task and outperforms WORLD in the F0 transformation task. Moreover, we argue that the F0-driven mechanism and the inductive bias obtained by source-filter modeling improve robustness to F0 values unseen during training, as shown by the results of the experimental evaluations.

[Paper] [Code]

Demo

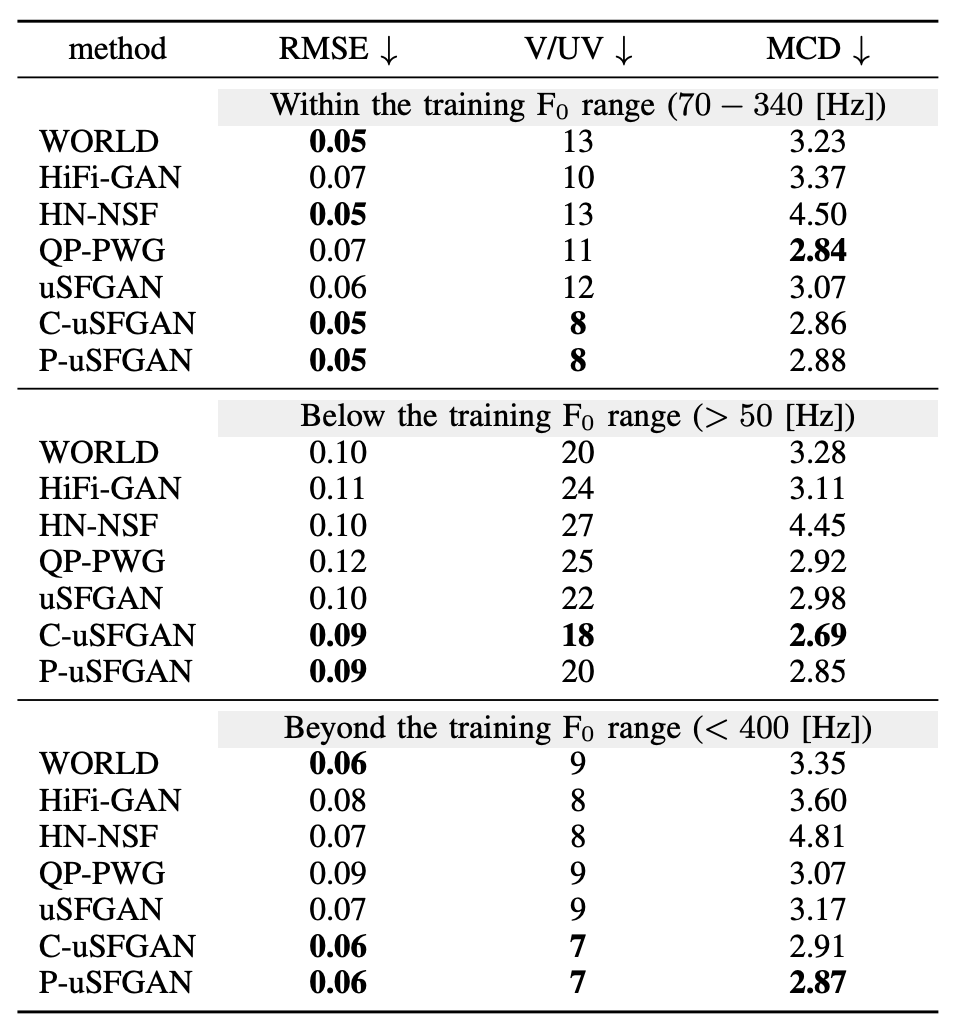

Only the VCTK dataset [1] is used for training. We limited the F0 range of the training data to 70–340 Hz to evaluate robustness to unseen F0 values. The default auxiliary features used for conditioning are mel-generalized cepstrum (MGC) and mel-cepstral aperiodicity (MAP) extracted using WORLD [2].

All provided samples are randomly chosen.

Baselines

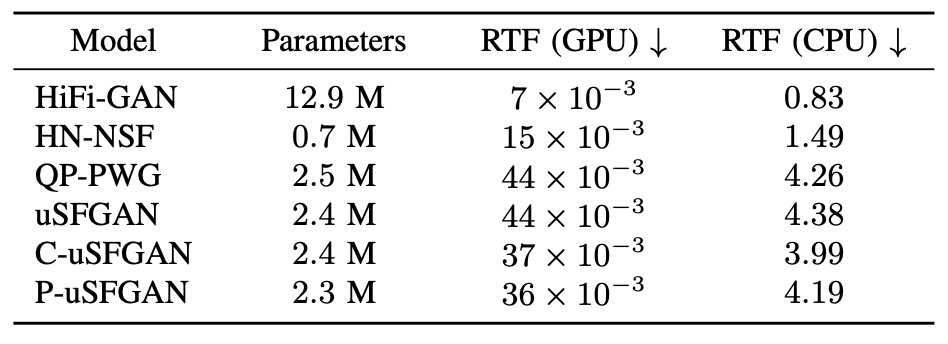

- HiFi-GAN : The vanilla HiFi-GAN (V1) [3] conditioned on mel-spectrogram.

- WORLD : A conventional source-filter vocoder [2].

- QP-PWG : Quasi-Periodic Parallel WaveGAN [4]. Please check the official demo for more information.

- uSFGAN : Basic unified source-filter GAN [5].

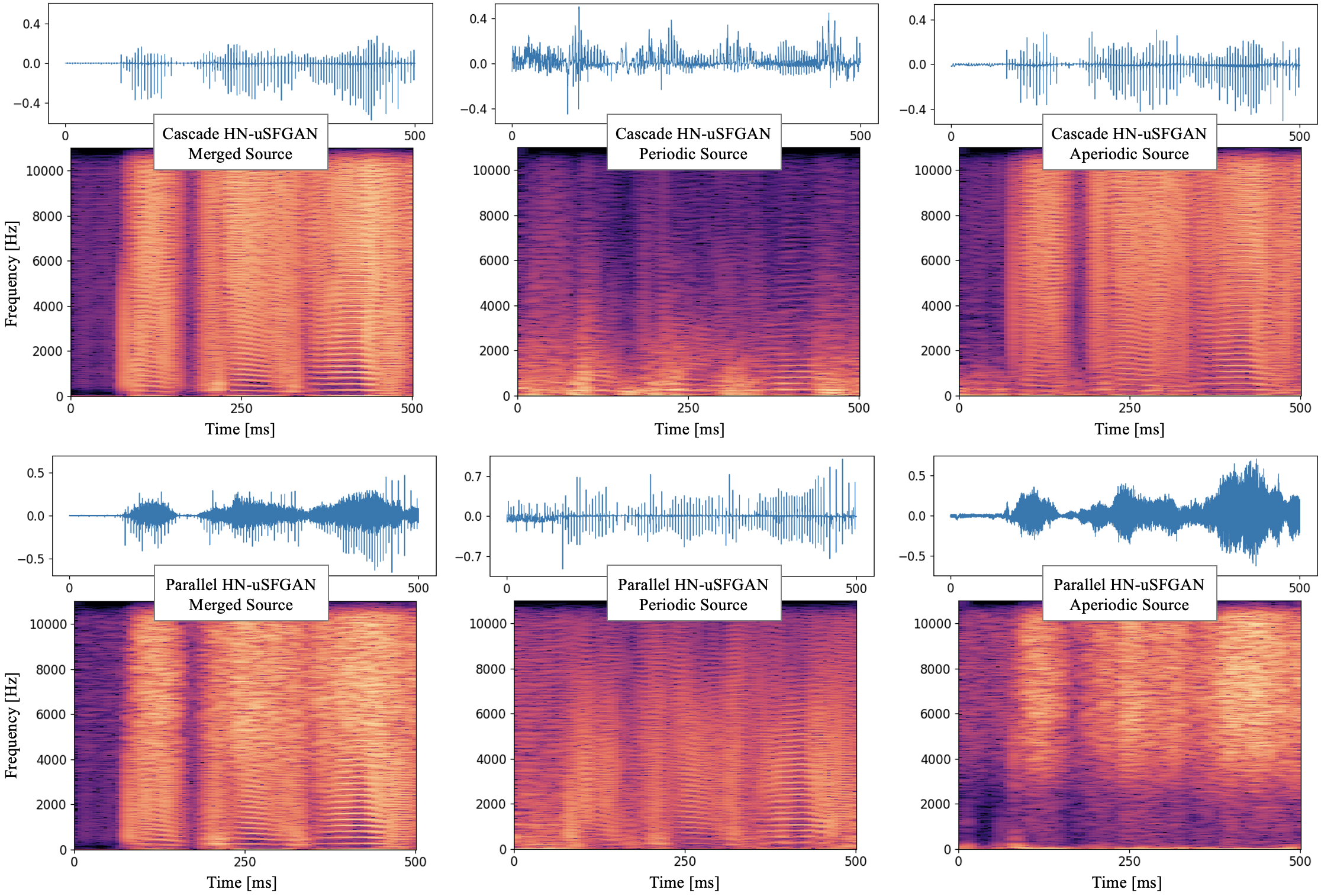

- C-uSFGAN : Cascade harmonic-plus-noise uSFGAN.

- P-uSFGAN : Parallel harmonic-plus-noise uSFGAN modified from [6].

- P-uSFGAN - Reg-loss : P-uSFGAN trained with the spectral envelope flattening loss [5] instead of the residual spectra targeting loss [6].

- P-uSFGAN - HN-SN: P-uSFGAN without the parallel harmonic-plus-noise source network but with the generator of the basic uSFGAN.

- P-uSFGAN - HiFi-D: P-uSFGAN without the multi-period and multi-scale discriminators of HiFi-GAN but with the discriminator of Parallel WaveGAN [7].

- P-uSFGAN - Mel-loss : P-uSFGAN trained with the multi-resolution STFT loss [7] instead of the mel-spectral L1 loss.

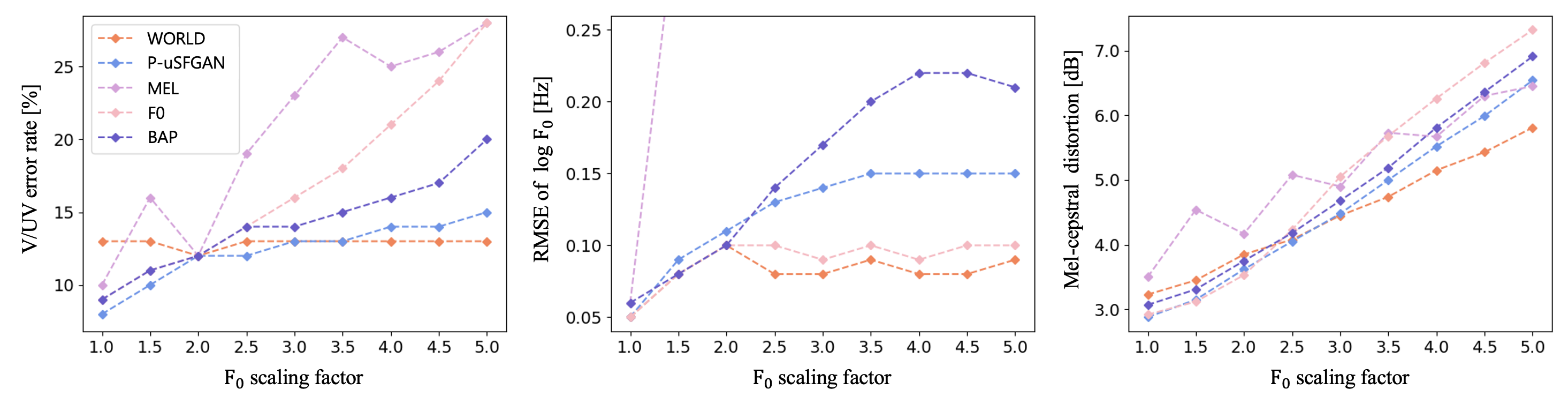

- P-uSFGAN w/ MEL: P-uSFGAN conditioned on mel-spectrogram instead of WORLD features.

- P-uSFGAN w/ F0: P-uSFGAN conditioned on F0 in addition to the default set of the auxiliary features.

- P-uSFGAN w/ BAP: P-uSFGAN conditioned on band-aperiodicity (BAP) instead of MAP.
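The harmonic-plus-noise variants listed above build on the classical idea of an excitation made of a periodic component driven by F0 plus a noise component. The following is a minimal sketch of that general idea only, not the learned source network of uSFGAN: the function name, the 24 kHz sample rate, the 120-sample hop, and the noise level are all illustrative assumptions.

```python
import math
import random


def harmonic_plus_noise_excitation(f0_hz, sample_rate=24000, hop_size=120,
                                   noise_std=0.03, seed=0):
    """Build a sine-plus-noise excitation from a frame-level F0 contour.

    Voiced frames (F0 > 0) get a unit-amplitude sine at F0 plus low-level
    Gaussian noise; unvoiced frames (F0 == 0) get noise only.
    All default values are illustrative assumptions.
    """
    rng = random.Random(seed)
    phase = 0.0
    excitation = []
    for f0 in f0_hz:
        for _ in range(hop_size):
            noise = rng.gauss(0.0, noise_std)
            if f0 > 0.0:
                # Accumulate phase so the sine stays continuous across frames.
                phase = (phase + 2.0 * math.pi * f0 / sample_rate) % (2.0 * math.pi)
                excitation.append(math.sin(phase) + noise)
            else:
                excitation.append(noise)
    return excitation
```

In such a scheme, pitch is changed by scaling the F0 contour before the excitation is generated, which is why an F0-driven source can in principle be steered to F0 values that were not seen in training.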

| Model | Copy Synthesis | F0 × 2^-2.0 | F0 × 2^-1.0 | F0 × 2^-0.75 | F0 × 2^-0.5 | F0 × 2^-0.25 | F0 × 2^0.25 | F0 × 2^0.5 | F0 × 2^0.75 | F0 × 2^1.0 | F0 × 2^2.0 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| Natural | | | | | | | | | | | |
| HiFi-GAN | | | | | | | | | | | |
| WORLD | | | | | | | | | | | |
| QP-PWG | | | | | | | | | | | |
| uSFGAN | | | | | | | | | | | |
| C-uSFGAN | | | | | | | | | | | |
| P-uSFGAN | | | | | | | | | | | |
| P-uSFGAN - Reg-loss | | | | | | | | | | | |
| P-uSFGAN - HN-SN | | | | | | | | | | | |
| P-uSFGAN - HiFi-D | | | | | | | | | | | |
| P-uSFGAN - Mel-loss | | | | | | | | | | | |
| P-uSFGAN w/ MEL | | | | | | | | | | | |
| P-uSFGAN w/ F0 | | | | | | | | | | | |
| P-uSFGAN w/ BAP | | | | | | | | | | | |
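The F0 transformation columns scale the F0 contour by powers of two, from 2^-2.0 (two octaves down) to 2^2.0 (two octaves up). As a rough illustration of why the larger factors probe unseen F0 values, the sketch below scales a contour and reports how many voiced frames leave the 70–340 Hz training range stated above. The helper names and the example contour are hypothetical, and treating 0 Hz as "unvoiced" is an assumed convention.

```python
# Training F0 range stated on this page (Hz).
F0_MIN_HZ, F0_MAX_HZ = 70.0, 340.0

# Exponents k of the scaling factors 2**k used in the F0 transformation columns.
EXPONENTS = [-2.0, -1.0, -0.75, -0.5, -0.25, 0.25, 0.5, 0.75, 1.0, 2.0]


def scale_f0(f0_hz, exponent):
    """Scale an F0 contour by 2**exponent, keeping unvoiced frames (0 Hz) at 0."""
    factor = 2.0 ** exponent
    return [f * factor if f > 0.0 else 0.0 for f in f0_hz]


def fraction_outside_training_range(f0_hz):
    """Fraction of voiced frames whose F0 lies outside [F0_MIN_HZ, F0_MAX_HZ]."""
    voiced = [f for f in f0_hz if f > 0.0]
    if not voiced:
        return 0.0
    outside = [f for f in voiced if f < F0_MIN_HZ or f > F0_MAX_HZ]
    return len(outside) / len(voiced)


if __name__ == "__main__":
    # Hypothetical contour: two unvoiced frames and three voiced frames.
    contour = [0.0, 100.0, 120.0, 200.0, 0.0]
    for k in EXPONENTS:
        shifted = scale_f0(contour, k)
        ratio = fraction_outside_training_range(shifted)
        print(f"2^{k:+.2f}: {ratio:.2f} of voiced frames outside training range")
```

For example, a 200 Hz frame scaled by 2^1.0 becomes 400 Hz, which is above the 340 Hz upper limit of the training data.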

Citation

@ARTICLE{10246854,
  author={Yoneyama, Reo and Wu, Yi-Chiao and Toda, Tomoki},
  journal={IEEE/ACM Transactions on Audio, Speech, and Language Processing},
  title={High-Fidelity and Pitch-Controllable Neural Vocoder Based on Unified Source-Filter Networks},
  year={2023},
  volume={},
  number={},
  pages={1-13},
  doi={10.1109/TASLP.2023.3313410}
}

References

[1] J. Yamagishi, C. Veaux, and K. MacDonald, “CSTR VCTK Corpus: English Multi-speaker Corpus for CSTR Voice Cloning Toolkit,” 2019.

[2] M. Morise, F. Yokomori, and K. Ozawa, “WORLD: a vocoder-based high-quality speech synthesis system for real-time applications,” IEICE Transactions on Information and Systems, vol. 99, no. 7, pp. 1877-1884, 2016.

[3] J. Kong, J. Kim, and J. Bae, “HiFi-GAN: Generative Adversarial Networks for Efficient and High Fidelity Speech Synthesis,” in Proc. NeurIPS, 2020, vol. 33, pp. 17022-17033.

[4] Y.-C. Wu, T. Hayashi, T. Okamoto, H. Kawai, and T. Toda, “Quasi-Periodic Parallel WaveGAN: A Non-Autoregressive Raw Waveform Generative Model With Pitch-Dependent Dilated Convolution Neural Network,” IEEE/ACM TASLP, vol. 29, pp. 792-806, 2021.

[5] R. Yoneyama, Y.-C. Wu, and T. Toda, “Unified Source-Filter GAN with Harmonic-plus-Noise Source Excitation Generation,” in Proc. Interspeech, 2022, pp. 848-852.

[6] R. Yoneyama, Y.-C. Wu, and T. Toda, “Unified Source-Filter GAN with Harmonic-plus-Noise Source Excitation Generation,” in Proc. Interspeech, 2022, pp. 848-852.

[7] R. Yamamoto, E. Song, and J.-M. Kim, “Parallel WaveGAN: A Fast Waveform Generation Model Based on Generative Adversarial Networks with Multi-Resolution Spectrogram,” in Proc. ICASSP, 2020, pp. 6199-6203.