Nonparallel High-Quality Audio Super Resolution with Domain Adaptation and Resampling CycleGANs

Reo Yoneyama1*, Ryuichi Yamamoto2, and Kentaro Tachibana2

1Nagoya University, Japan, 2LINE Corporation, Japan

*Work performed during an internship at LINE Corporation.

Accepted to ICASSP 2023

Abstract

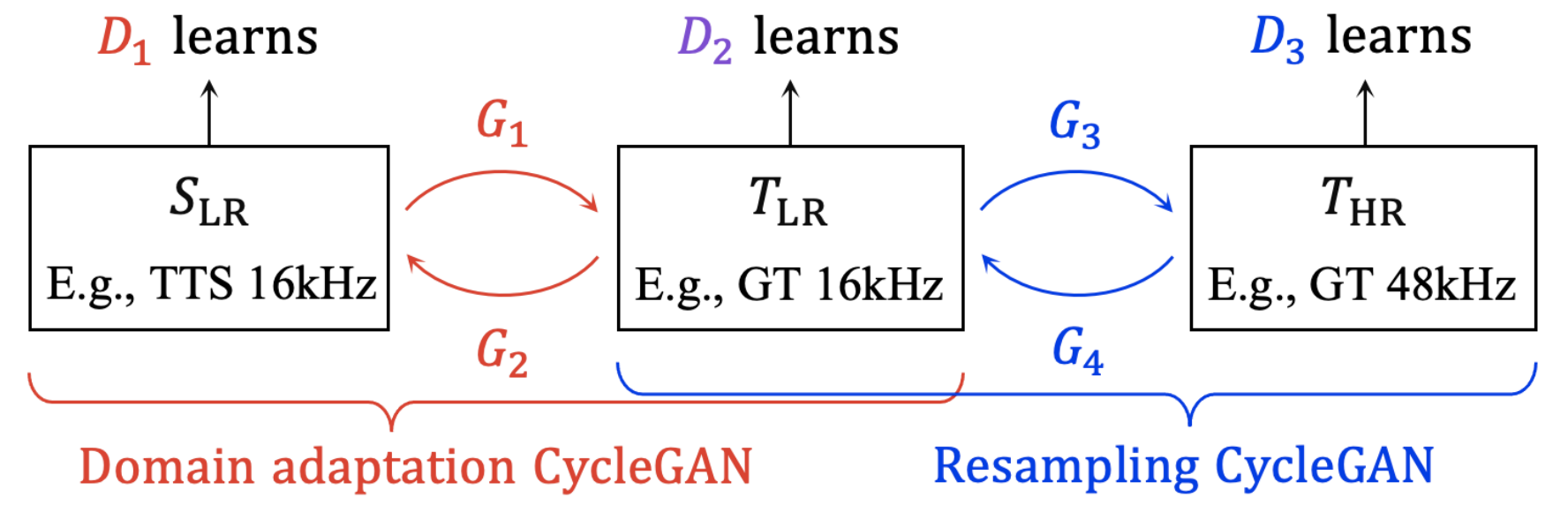

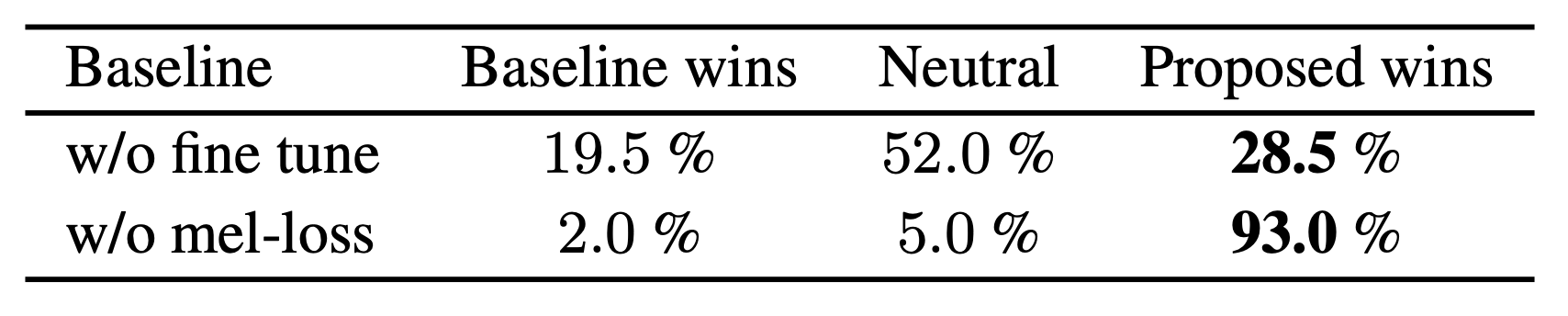

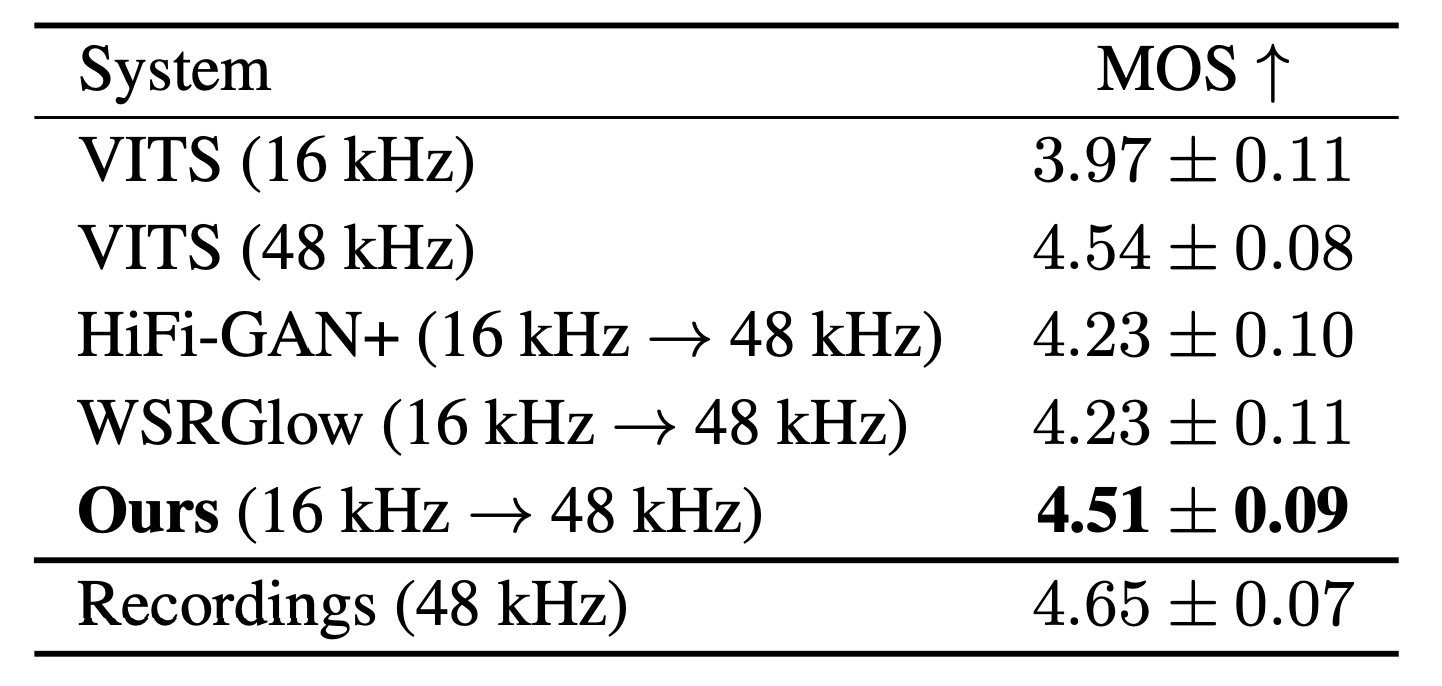

Neural audio super-resolution models are typically trained on low- and high-resolution audio signal pairs. Although these methods achieve highly accurate super-resolution if the acoustic characteristics of the input data are similar to those of the training data, challenges remain: the models suffer from quality degradation for out-of-domain data, and paired data are required for training. To address these problems, we propose Dual-CycleGAN, a high-quality audio super-resolution method that can utilize unpaired data based on two connected cycle-consistent generative adversarial networks (CycleGANs). Our method decomposes the super-resolution process into domain adaptation and resampling processes to handle acoustic mismatch in the unpaired low- and high-resolution signals. The two processes are then jointly optimized within the CycleGAN framework. Experimental results verify that the proposed method significantly outperforms conventional methods when paired data are not available.
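The decomposition described in the abstract can be illustrated with a toy sketch. It is not the authors' implementation: the four generator functions below are hypothetical hand-written stand-ins for learned networks, only the cycle-consistency term is shown (in the L1 form used by the original CycleGAN [6]), and the adversarial and other loss terms are omitted.

```python
from typing import Callable, List

Signal = List[float]


def l1_distance(a: Signal, b: Signal) -> float:
    """Mean absolute difference between two equal-length signals."""
    assert len(a) == len(b), "signals must have the same length"
    return sum(abs(x - y) for x, y in zip(a, b)) / len(a)


def cycle_consistency_loss(
    x: Signal,
    forward: Callable[[Signal], Signal],
    backward: Callable[[Signal], Signal],
) -> float:
    """L1 distance between x and its round trip backward(forward(x))."""
    return l1_distance(backward(forward(x)), x)


# Hypothetical stand-ins for the learned generators (assumptions, not the paper's models).
def adapt_to_target(x: Signal) -> Signal:
    """Domain adaptation: source-domain low-res -> target-domain low-res."""
    return [0.5 * v for v in x]


def adapt_to_source(x: Signal) -> Signal:
    """Inverse domain adaptation: target-domain low-res -> source-domain low-res."""
    return [2.0 * v for v in x]


def upsample_x3(x: Signal) -> Signal:
    """Resampling: low-res -> high-res (here: naive sample repetition, factor 3)."""
    return [v for v in x for _ in range(3)]


def downsample_x3(x: Signal) -> Signal:
    """Inverse resampling: high-res -> low-res (here: keep every third sample)."""
    return x[::3]


def super_resolve(x: Signal) -> Signal:
    """Super-resolution as the composition: domain adaptation, then resampling."""
    return upsample_x3(adapt_to_target(x))


x_low = [0.0, 0.25, -0.5, 1.0]

# One cycle-consistency term per CycleGAN; joint optimization minimizes their sum.
loss_da = cycle_consistency_loss(x_low, adapt_to_target, adapt_to_source)
loss_rs = cycle_consistency_loss(adapt_to_target(x_low), upsample_x3, downsample_x3)
total_cycle_loss = loss_da + loss_rs

x_high = super_resolve(x_low)  # three times as many samples as x_low
```

In this toy setting each backward function exactly inverts its forward function, so both cycle terms are zero; with learned generators the terms are generally non-zero and act as training signals.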

[Arxiv] [Code]

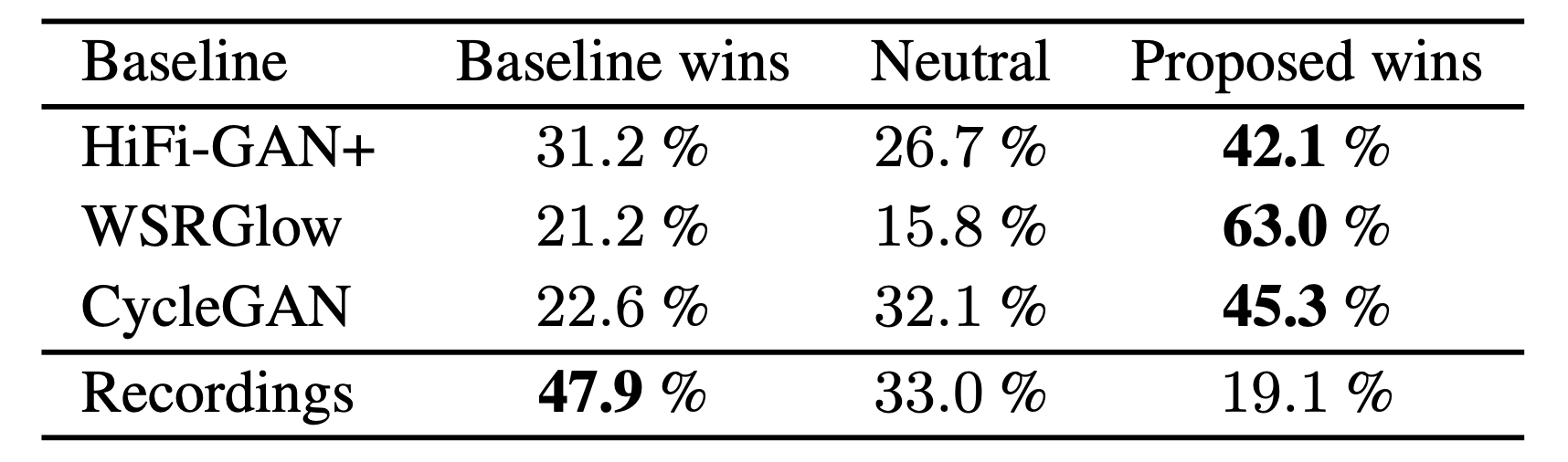

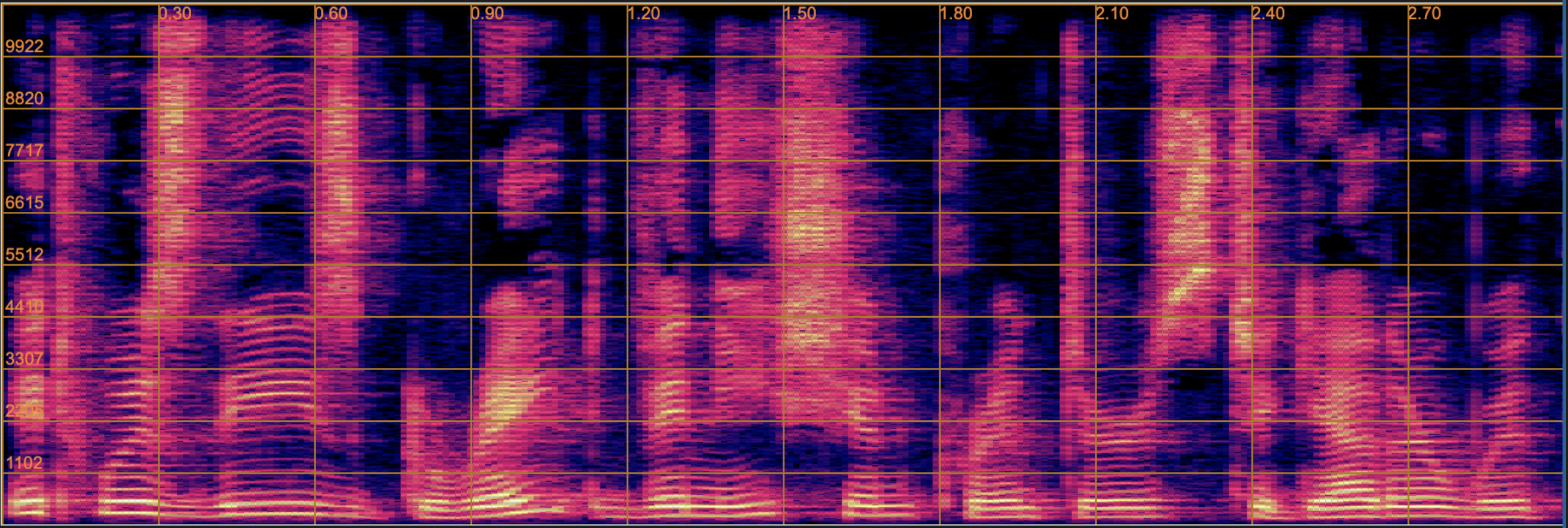

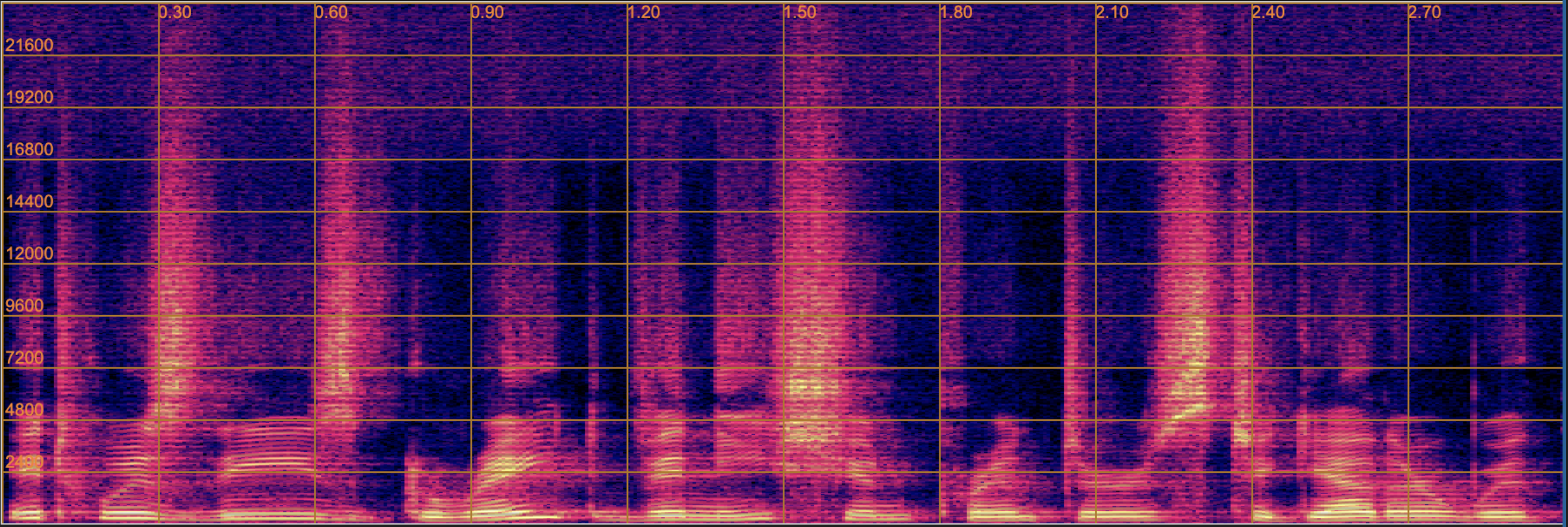

Demo 1: SR Across Different Recording Environments

Here we provide up-sampled speech samples (16 kHz -> 48 kHz) from the LJSpeech corpus [1]. The baselines are WSRGlow [4], HiFi-GAN+ [5], and a non-parallel SR model based on a single CycleGAN [6]. WSRGlow and HiFi-GAN+, which need parallel data for training, were trained on the VCTK corpus [2] because LJSpeech is recorded at 22.05 kHz, so paired 16 kHz and 48 kHz audio data are unavailable. In contrast, CycleGAN and Dual-CycleGAN were trained on LJSpeech and VCTK in a non-parallel manner. The provided speech samples were randomly selected.
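The sampling rates in this demo imply some simple arithmetic, sketched below. The helper names `resample_ratio` and `nyquist_hz` are illustrative, and the sketch assumes that the 16 kHz inputs are obtained by downsampling the 22.05 kHz LJSpeech recordings; it does not reproduce the authors' preprocessing.

```python
from fractions import Fraction


def resample_ratio(src_hz: int, dst_hz: int) -> Fraction:
    """Return dst/src as a reduced fraction (upsampling factor / downsampling factor)."""
    return Fraction(dst_hz, src_hz)


def nyquist_hz(sample_rate_hz: int) -> float:
    """Highest frequency representable at the given sampling rate."""
    return sample_rate_hz / 2


# Assumed preprocessing: LJSpeech 22.05 kHz -> 16 kHz model input.
lj_to_16k = resample_ratio(22050, 16000)      # 320/441

# Super-resolution task in this demo: 16 kHz -> 48 kHz.
sr_16k_to_48k = resample_ratio(16000, 48000)  # 3

# Bandwidth available at each rate, in Hz.
band_16k = nyquist_hz(16000)     # 8000.0
band_22k = nyquist_hz(22050)     # 11025.0
band_48k = nyquist_hz(48000)     # 24000.0
```

Under these assumptions, the SR model has to generate the band between 8 kHz and 24 kHz, while the 22.05 kHz ground truth only contains content up to 11.025 kHz. This is why no paired 48 kHz target exists for LJSpeech.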

| Target domain of Dual-CycleGAN | p351_009 | p362_010 | p364_247 | p374_109 | p376_099 | s5_212 |
|---|---|---|---|---|---|---|
| GT 48 kHz (VCTK corpus) | | | | | | |

| Model | LJ001-0056 | LJ005-0204 | LJ011-0181 | LJ019-0317 | LJ024-0121 | LJ027-0065 | LJ030-0114 | LJ048-0073 |
|---|---|---|---|---|---|---|---|---|
| GT 16 kHz | | | | | | | | |
| GT 22.05 kHz | | | | | | | | |
| WSRGlow | | | | | | | | |
| HiFi-GAN+ | | | | | | | | |
| CycleGAN | | | | | | | | |
| Dual-CycleGAN | | | | | | | | |
| Dual-CycleGAN w/o Fine-tune | | | | | | | | |
| Dual-CycleGAN w/o Mel-loss | | | | | | | | |

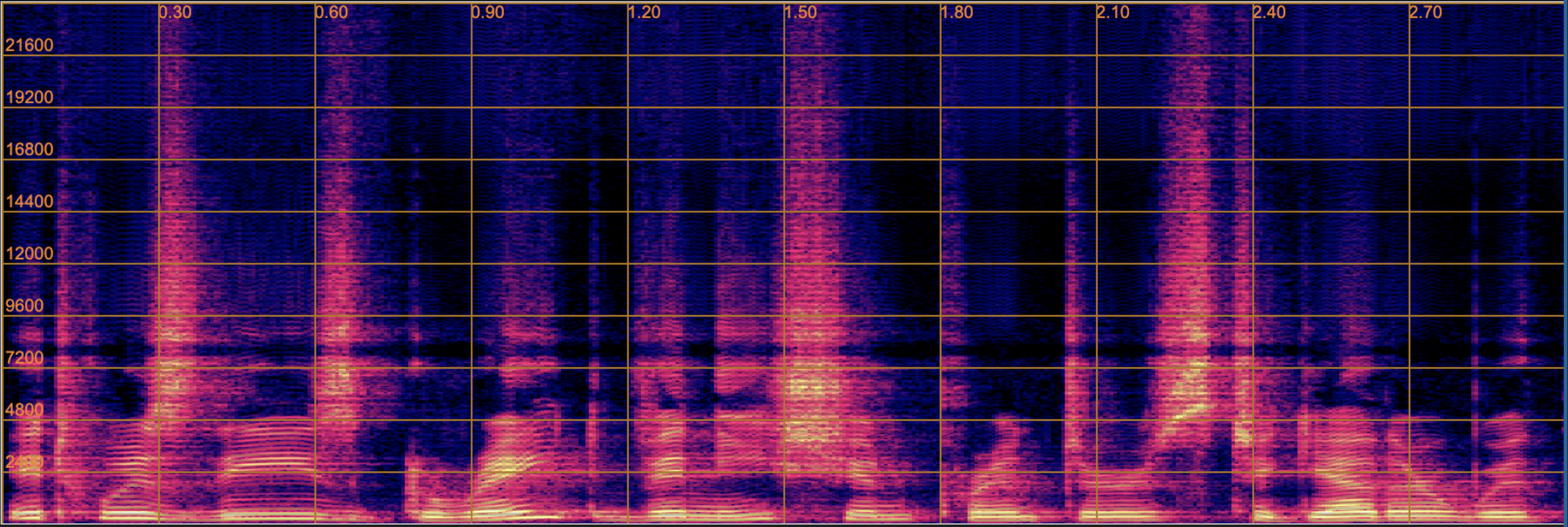

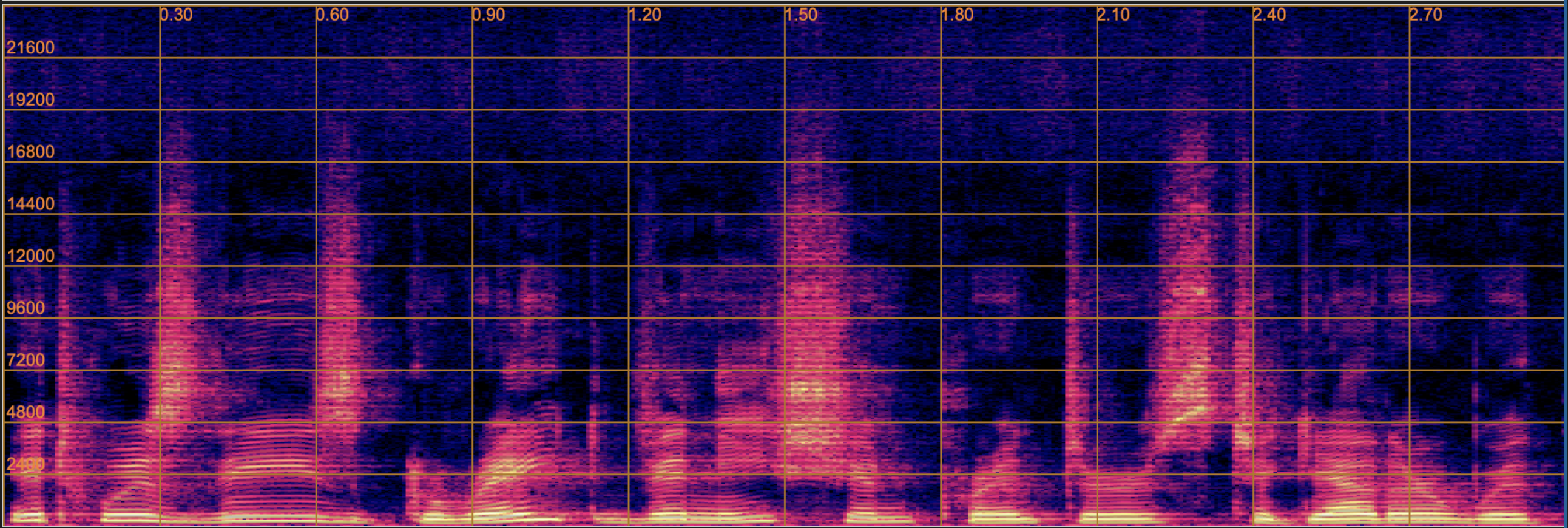

Demo 2: SR on TTS Generated Speech

Here we provide up-sampled speech samples (16 kHz -> 48 kHz) generated by a TTS system. We used VITS [3] as the TTS system. The baselines are WSRGlow, HiFi-GAN+, and a non-parallel SR model based on a single CycleGAN. WSRGlow and HiFi-GAN+, which need parallel data for training, were trained on 16 kHz and 48 kHz recorded samples of the VCTK corpus because TTS-generated speech has different acoustic features from ground-truth speech. CycleGAN and Dual-CycleGAN were trained on TTS-generated and ground-truth VCTK speech in a non-parallel manner. The provided speech samples were randomly selected.

| Model | p351_008 | p361_413 | p362_168 | p363_023 | p364_220 | p374_282 | p376_022 | s5_358 |
|---|---|---|---|---|---|---|---|---|
| VITS 16 kHz | | | | | | | | |
| VITS 48 kHz | | | | | | | | |
| GT 48 kHz | | | | | | | | |
| WSRGlow | | | | | | | | |
| HiFi-GAN+ | | | | | | | | |
| Dual-CycleGAN | | | | | | | | |

Citation

@misc{https://doi.org/10.48550/arxiv.2210.15887,
  author = {Reo Yoneyama and Ryuichi Yamamoto and Kentaro Tachibana},
  title = {{Nonparallel High-Quality Audio Super Resolution with Domain Adaptation and Resampling CycleGANs}},
  year = {2022},
  publisher = {arXiv},
  url = {https://arxiv.org/abs/2210.15887},
  doi = {10.48550/ARXIV.2210.15887},
  copyright = {arXiv.org perpetual, non-exclusive license}
}

References

[1] K. Ito and L. Johnson, “The LJ Speech Dataset,” https://keithito.com/LJ-Speech-Dataset/, 2017.

[2] J. Yamagishi, C. Veaux, and K. MacDonald, “CSTR VCTK Corpus: English Multi-speaker Corpus for CSTR Voice Cloning Toolkit,” 2019.

[3] J. Kim, J. Kong, and J. Son, “Conditional Variational Autoencoder with Adversarial Learning for End-to-End Text-to-Speech,” in Proc. ICML, 2021, pp. 5530–5540.

[4] K. Zhang, Y. Ren, C. Xu, and Z. Zhao, “WSRGlow: A Glow-Based Waveform Generative Model for Audio Super-Resolution,” in Proc. Interspeech, 2021, pp. 1649–1653.

[5] J. Su, Y. Wang, A. Finkelstein, and Z. Jin, “Bandwidth Extension is All You Need,” in Proc. ICASSP, 2021, pp. 696–700.

[6] J.-Y. Zhu, T. Park, P. Isola, and A. A. Efros, “Unpaired Image-to-Image Translation using Cycle-Consistent Adversarial Networks,” in Proc. ICCV, 2017.